Demystifying the Generative AI and LLM Buzz in Security Products: The Future or Just Grains of Salt?

A deep dive into recent implementations of Generative AI and LLMs in security products, and a peek into the future.

Welcome to The Cybersecurity Pulse 🖥️! Before we dive into the exciting stuff, why not subscribe? I run a newsletter that brings you the latest from the crossroads of innovation and cybersecurity, and I also maintain an Intelligence Hub filled with curated research and data points about the industry. Your subscription tells me that what I'm writing resonates with you and adds value to your day. Your support goes a long way!


Introduction

Over the past several months, the generative AI hype train has spiraled out of control across what seems like every industry. In the cybersecurity industry, this has manifested as an influx of vendors and cloud providers harnessing Large Language Models (LLMs) like the ones behind ChatGPT, and some even developing their own (e.g., Google's Sec-PaLM and BigID's BigAI). Although these implementations aren't a silver bullet, they show promise as force multipliers: increasing operational speed and efficiency, enhancing threat and incident response, identifying vulnerabilities in code, and more. However, we're merely at the dawn of this transformative journey, and it's natural for the security community to be skeptical, especially after generative AI seemed to steal the show at RSA. We must remember that no single technology is a panacea, and the complexity of cybersecurity still demands significant human intervention.

In this post, we’ll navigate the themes and different use cases seen in recent implementations of generative AI and the use of language models in security products. This post aims to demystify the recent uptick of generative AI implementations in security products while providing you with a mental framework on how to evaluate the significance of these solutions and whether they can help your organization out.

In a follow-up post, I'll cover the promising potential of custom in-house autonomous security agents. Now let's get to the fun!

Setting the Stage

AI and Machine Learning (ML) have been part of the cybersecurity landscape for a while, with early implementations like Cognitive Security's behavioral analysis tool dating back to 2013. However, it's been challenging to separate the wheat from the chaff, with some vendors making lofty claims about their AI-powered solutions. This has understandably led to some skepticism in the industry.

As security practitioners, we're inherently skeptical of vendors, but the recent surge in generative AI applications in security solutions is encouraging. And while no single security solution will ever be a cure-all, several tools can boost security posture and enable teams to work more efficiently and confidently.

Commonly Used Terms

Generative AI: This subset of artificial intelligence uses machine learning techniques to create new content. It can generate text, images, or sounds that mirror the data it was trained on. In the context of cybersecurity, Generative AI can be used to simulate cyber attacks for testing purposes or to generate phishing emails for training exercises.

Large Language Model (LLM): This is a specialized form of generative AI that's trained on extensive text and code datasets. LLMs can understand, summarize, generate, and predict content. In cybersecurity products, LLMs can be used to analyze and predict potential security threats based on patterns in large datasets. For example, they can help identify suspicious activities or behaviors by analyzing network traffic or system logs.

Criteria

There are endless ways in which a security solution can leverage AI, so it's essential to discern the type of AI at play. The focus of this post is generative AI, but how do we distinguish it from other types of AI? Here's the lens we'll use:

Content Generation: If the product generates new content, it's likely using generative AI.

Data Analysis: If the product solely analyzes data for pattern identification, correlation, and/or predictive analytics, it's likely using another form of AI.

Functionality: If the product is primarily creating content such as generating phishing emails for training, simulating cyber attacks for testing, crafting detailed incident reports, or even translating natural language commands into executable code, it's likely using generative AI. If it's primarily analyzing data, identifying potential threats, or making decisions based on data analysis, it's likely using another form of AI.

For example, Dataminr's Pulse for Cyber Risk service uses deep learning and real-time detection models to analyze a wide range of data formats, including text, image, video, sound, and sensor data. It can understand text in over 150 languages, recognize images and logos, process audio, and detect anomalies, covering both physical and cyber threats. Impressive, right? However, this isn't generative AI. The AI here is about gathering, analyzing, and correlating existing data. A generative AI feature, by contrast, might create new threat scenarios based on the analyzed data or write detailed reports summarizing detected threats.

I applied this kind of scrutiny to security products claiming to use generative AI, and it's the approach I used to identify the themes and use cases discussed in this post.

Themes

In this section, we'll explore the five key themes that have emerged in the integration of generative AI into security products. Themes are the broad areas where AI can make a significant impact. Within each theme are specific use cases: the real-world applications where we can leverage generative AI to solve concrete security challenges. Think of the themes as the big-picture strategies, and the use cases as the specific tactics used to achieve those strategies and objectives.

Summarizers, Explainers, and Advisors

Generative AI and LLMs can be used to provide concise summaries, detailed explanations, and expert advice. This can greatly enhance the efficiency and effectiveness of incident response and vulnerability management.

Incident Response Post-mortems: Generative AI can analyze the details of a security incident and generate a concise, easy-to-understand summary of what happened, why it happened, and what the impact was. It can also suggest remediation steps and preventative measures to avoid similar incidents in the future.

Vulnerability Explanation and Remediation Step Advisor: Generative AI can provide detailed explanations of identified vulnerabilities, including their potential impact and the conditions that make a system susceptible to them. Furthermore, it can suggest the most effective steps to remediate a vulnerability, taking into account the specific context and constraints of the situation. This dual role of explaining and advising makes it a powerful tool in vulnerability management.

Natural Language Processing (NLP) to Code Generation

Generative AI can translate natural language (plain text) commands or descriptions into executable code, streamlining the implementation of security policies and enhancing threat detection.

Policy Enforcement: Generative AI can generate code to enforce specified security policies, translating human-readable policy descriptions into machine-executable code.

Threat Detection Rule and Query Generators: Generative AI can generate rules and queries for threat detection systems based on natural language descriptions of threats, improving the speed and accuracy of threat detection.

Code Review and Vulnerability Detection

Generative AI can scrutinize source code to identify potential vulnerabilities, bugs, or violations of coding standards, enhancing the security and quality of software.

Bug Detection: Generative AI can analyze code to find bugs that might have been overlooked during manual code reviews. It can also generate suggestions for bug fixes, improving overall code quality.

Vulnerability Identification: Generative AI can scan code to identify potential vulnerabilities and generate patches or suggest modifications to rectify these vulnerabilities.

Reverse Engineering

Generative AI can be used to deconstruct and analyze compiled code or hardware, often in the context of malware analysis. It can generate detailed reports on how a piece of malware functions and how to defend against it.

Malware Analysis: Generative AI can analyze malware to understand its functionality, identify its command and control servers, and determine its impact. It can also generate remediation strategies, helping to develop effective defenses.

Prioritization

Generative AI can analyze large volumes of data to identify the most important issues or threats, generating detailed reports that help security teams focus their efforts where they're most needed.

Data and Log Sifting: Generative AI can analyze large volumes of logs and other data to identify potential threats or anomalies. It can generate alerts or reports that highlight these potential threats, effectively finding the "needle in the haystack."

Vulnerability and Patch Management Prioritization: Generative AI can assess the severity and potential impact of identified vulnerabilities, generating prioritized lists of issues to address and patches to apply.

Real-World Use Cases & Implementations

The use cases below showcase the applications of generative AI and LLMs in cybersecurity and align with the key themes identified. However, this isn't an exhaustive list. Also, I'm not affiliated with any of these vendors, nor have I tested all of these solutions. This overview is to illustrate real-world applications, not to endorse specific products.

When evaluating AI security solutions (or any solution), you should be critical of the robustness of the solution. Ask the vendor questions about accuracy, memory recall, data training and model quality, regulatory compliance, scalability, integration, compatibility, etc. This will help you root out the weaker contenders and identify solutions that leverage robust AI practices to solve real-world security challenges.

As with any security solution you onboard, you’ll want to continuously validate the findings and alerts to test the quality and accuracy of the solution. You’ll also want to ensure that your security teams have the knowledge and skills needed to get the highest ROI possible out of the solution. Now, let’s take a look at some use cases!

Threat Detection and Analysis

Generative AI and LLMs can be trained to understand and predict patterns in network traffic or user behavior. By processing vast amounts of data, they can identify unusual patterns, potential threats, or breaches, often more quickly and accurately than traditional methods. They can also generate reports on potential threats, explaining their potential impacts and suggesting mitigation strategies. This spans three themes: Summarizers, Explainers, and Advisors; NLP to Code Generation; and Prioritization.

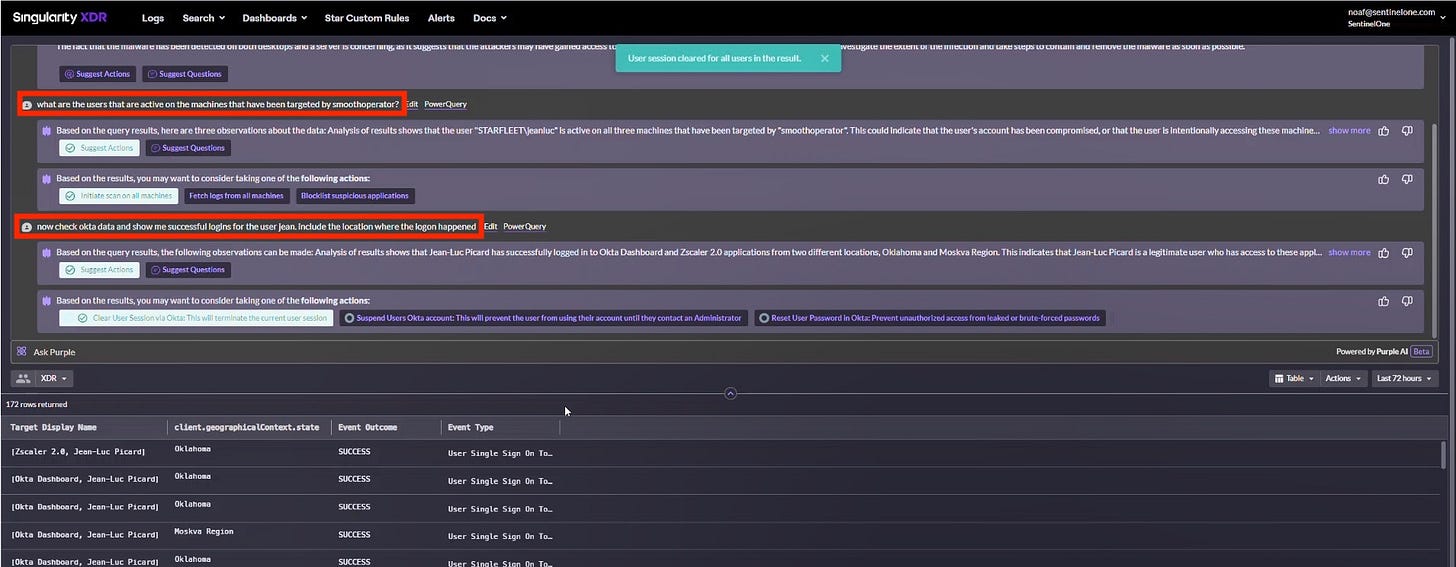

SentinelOne

SentinelOne demonstrates AI-powered threat hunting with its release of Purple AI. It enables analysts to use prompts such as “Is my environment infected with SmoothOperator?” or “Do I have any indicators of SmoothOperator on my endpoints?” to hunt for activity and Indicators of Compromise (IoCs) related to a specific named threat. You can read more about their implementation in this article.

Nozomi Networks

Nozomi Networks has implemented AI-powered insights with an Insights Dashboard where alerts are automatically correlated, prioritized, and supported with root-cause information. They also offer AI-based queries and analyses, allowing users to leverage natural language queries and get answers to common questions about vulnerabilities, network assets, and other environmental details. Advanced predictive monitoring provides early warnings about system behaviors that deviate from the norm. You can read more about their implementation in this article.

Software Supply Chain Security

Generative AI and LLMs can be used to read and understand software code, potentially identifying areas of vulnerability that human analysts might miss. They can also generate patches or suggest modifications to rectify these vulnerabilities. This falls under the Code Review and Vulnerability Detection theme and the Prioritization theme.

Veracode

Veracode Fix is an example of AI applied to software supply chain security. It uses a generative pre-trained transformer engine to provide automatic code suggestions for remediating security flaws discovered when scanning software. It was trained using Veracode's existing knowledge base of more than 140 trillion lines of code and its 17 years of security research. This article provides more details on their implementation.

Codium AI

TestGPT from Codium AI is an IDE extension that enables an iterative process of generating tests and then tweaking code based on the outcomes of those tests. This interaction with the developer helps the tool understand the code better and generate more accurate and meaningful tests while guiding the developer to write better code. More about their implementation can be found in this VentureBeat article.

GitLab

GitLab has introduced a new security feature that leverages AI to explain vulnerabilities to developers, streamlining the process of identifying and remediating security risks in code. You can read more about their implementation in this TechCrunch article.

Threat Intelligence

Generative AI and LLMs can be used to analyze vast amounts of threat intelligence data, identifying key threats and providing detailed, real-time assessments of the threat landscape. This falls under the Prioritization theme and the Summarizers, Explainers, and Advisors theme.

Fletch AI

Fletch AI's solution aims to sift through the entire threat landscape to identify major threats before they appear in the news. It correlates threats to a company’s tech stack, geolocation(s), and industry exposure, and then acts as an early warning system by delivering a daily report of the threats most relevant to an organization. It also delivers personalized advice to prevent, fix, understand, and communicate threats. You can find more about their solution here, and here.
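To make the correlation step concrete, here's a toy relevance filter in Python. This is not Fletch's actual method: the `OrgProfile` and `Threat` fields, the weights, and the `daily_report` helper are all hypothetical. It's also plain rule-based scoring rather than generative AI; in a product like this, an LLM would more plausibly sit downstream, turning the top-ranked matches into the written daily report and advice.

```python
from dataclasses import dataclass


@dataclass
class OrgProfile:
    """Hypothetical description of the organization being protected."""
    tech_stack: set[str]
    regions: set[str]
    industry: str


@dataclass
class Threat:
    """Hypothetical threat-intel record; field names are illustrative."""
    name: str
    affected_products: set[str]
    targeted_regions: set[str]
    targeted_industries: set[str]


# Assumed weights: running the affected software matters most.
WEIGHTS = {"tech": 3, "industry": 2, "region": 1}


def relevance(threat: Threat, org: OrgProfile) -> int:
    """Score a threat by which dimensions of the org profile it overlaps."""
    score = 0
    if threat.affected_products & org.tech_stack:
        score += WEIGHTS["tech"]
    if org.industry in threat.targeted_industries:
        score += WEIGHTS["industry"]
    if threat.targeted_regions & org.regions:
        score += WEIGHTS["region"]
    return score


def daily_report(threats: list[Threat], org: OrgProfile, top_n: int = 5) -> list[tuple[str, int]]:
    """Return the top-N relevant threats (score > 0), highest score first."""
    scored = [(t.name, relevance(t, org)) for t in threats]
    relevant = [item for item in scored if item[1] > 0]
    # sorted() is stable (even with reverse=True), so ties keep their input order.
    return sorted(relevant, key=lambda item: item[1], reverse=True)[:top_n]


if __name__ == "__main__":
    org = OrgProfile(tech_stack={"confluence", "nginx"}, regions={"US"}, industry="finance")
    feed = [
        Threat("Threat A", {"exchange"}, {"EU"}, {"retail"}),
        Threat("Threat B", {"confluence"}, {"US"}, {"finance"}),
    ]
    for name, score in daily_report(feed, org):
        print(name, score)
```

Weighting tech-stack overlap highest reflects the intuition that a threat against software you actually run matters more than one that merely targets your region; treat the numbers as placeholders to tune.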

Recorded Future

Recorded Future recently released what it claims is the first AI for threat intelligence. The tool uses OpenAI's GPT model to process threat intel and generate real-time assessments of the threat landscape. The model was trained on more than 10 years of insights from Recorded Future's research team and 100 terabytes of data from various sources. The tool enriches threat intel with generated reports that give human analysts more context around security incidents that may impact their organization, along with guidance on how to respond effectively. You can read more about their implementation here.

Incident Response

In the aftermath of a cybersecurity incident, generative AI and LLMs can be used to generate detailed, understandable reports, helping stakeholders to understand what happened, how it was resolved, and what steps need to be taken to prevent a similar incident in the future. This falls under the theme of Summarizers, Explainers, and Advisors.

Microsoft

Microsoft introduced automatic attack disruption in Microsoft 365 Defender, which can scan multiple endpoints and quickly identify red flags such as suspicious email locations. Once a threat is detected, it can isolate the compromised account and halt all transactions. This tool enhances incident response by automating the detection and containment of threats, reducing the time and effort required by human analysts.

Data Security

Generative AI and LLMs can help identify, classify, and safeguard sensitive data by monitoring access and leveraging data classification, encryption, and anonymization, ensuring robust protection for highly sensitive data. This aligns with the Prioritization theme because, of course, you want to prioritize security for sensitive data.

BigID's BigAI

BigID has launched BigAI, a privacy-by-design LLM designed to discover and protect sensitive data. BigAI scans structured and unstructured data, whether stored in the cloud or on-premises, using a mix of ML-driven classification and generative AI to suggest titles and descriptions for data tables, columns, and clusters so they’re easier to locate via search. More about their implementation can be found in this article.

Cado Security's MaskedAI

Cado Security has debuted MaskedAI, an open-source library that enables more secure use of Large Language Model (LLM) APIs, such as OpenAI's GPT-4, without sending out sensitive information. The library replaces sensitive data with placeholders and sends the masked request to the API. It stores a lookup table locally so it can later reconstruct the API output with the sensitive data restored for the user to consume. More about their implementation can be found in this article.
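Here's a minimal Python sketch of the mask-and-restore flow described above. It does not use Masked-AI's actual API: `mask_sensitive`, `unmask`, the two regex patterns (emails and IPv4 addresses only), and the `fake_llm` stand-in for a real API call are all hypothetical, chosen just to illustrate the local lookup-table idea.

```python
import re

# Hypothetical patterns for this sketch: only emails and IPv4 addresses are detected.
SENSITIVE_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "IP": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
}


def mask_sensitive(text: str) -> tuple[str, dict[str, str]]:
    """Swap sensitive values for placeholders; return masked text plus a local lookup table."""
    lookup: dict[str, str] = {}  # placeholder -> original value (never leaves this machine)
    seen: dict[str, str] = {}    # original value -> placeholder (reused for repeats)

    def placeholder_for(label: str, value: str) -> str:
        if value not in seen:
            placeholder = f"<{label}_{len(lookup)}>"
            lookup[placeholder] = value
            seen[value] = placeholder
        return seen[value]

    for label, pattern in SENSITIVE_PATTERNS.items():
        text = pattern.sub(lambda m, label=label: placeholder_for(label, m.group(0)), text)
    return text, lookup


def unmask(text: str, lookup: dict[str, str]) -> str:
    """Restore original values in the model's output using the local lookup table."""
    for placeholder, value in lookup.items():
        text = text.replace(placeholder, value)
    return text


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM API call; it only ever sees masked text."""
    return "Summary: " + prompt.removeprefix("Summarize: ")


if __name__ == "__main__":
    masked, table = mask_sensitive("Summarize: alice@example.com logged in from 203.0.113.7 twice.")
    print(masked)  # placeholders only, safe to send to the third-party API
    print(unmask(fake_llm(masked), table))
```

The key design point is that the lookup table stays local; only placeholder tokens reach the external API. Real-world masking needs much broader detection (names, API keys, hostnames), and restoration only works if the model echoes the placeholders back intact.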

Cloud Provider Implementations

Cloud providers are perfectly positioned to tap into the game-changing potential of Generative AI and LLMs. With their vast computational resources, treasure troves of data, and teams of PhDs, they can bring advanced security solutions to market at global scale. In this section, we dive into the work of Google and Microsoft, two titans leveraging Generative AI and LLMs to redefine the cybersecurity landscape.

Notice that I didn't include AWS. That's because it has been slow to move on this front and hasn't publicly announced any security features that leverage these technologies. Was it caught off guard, or is it waiting to see what's worth the investment? 🤔

Google Cloud

Google Cloud launched the Google Cloud Security AI Workbench at RSA, a new platform backed by Sec-PaLM, an LLM fine-tuned specifically for security use cases. The model leverages Google's massive trove of insights into the security landscape, paired with Mandiant's threat intelligence from nearly two decades in the trenches. What stood out to me most is that Google may soon allow customers to privately connect their own data to the LLM. Below are the use cases covered in the release, broken down by theme:

Summarizers, Explainers, and Advisors

Chronicle AI: Uses generative AI to summarize query results and provide an interactive interface for exploring security events.

Mandiant Threat Intelligence AI: Summarizes key insights and Indicators of Compromise (IoCs) across threat intel reports, providing a concise overview of potential threats.

Security Command Center Attack Path Simulation: Generates human-readable summaries and explanations of attack paths, providing a clear overview of potential threats and vulnerabilities.

Natural Language Processing (NLP) to Code Generation

Chronicle AI: Transforms natural language into actionable queries and detections, allowing users to interact with billions of security events.

Assured OSS: Enhances Google Cloud's vulnerability management for open-source software (OSS) by extending coverage to more OSS packages.

Mandiant Threat Intelligence AI: Employs generative AI to quickly find, summarize, and act on threats relevant to your organization, translating natural language descriptions into actionable insights.

Reverse Engineering

Malware Analysis: VirusTotal's Code Insight leverages Google Cloud's Security AI Workbench and the Sec-PaLM LLM to deconstruct and analyze potentially harmful files, then describes its findings in plain language. That task could previously take an experienced reverse engineer a couple of hours or more, depending on the complexity of the file.

Microsoft Azure

Microsoft introduced a new tool called Security Copilot. This tool is powered by OpenAI's GPT-4 generative AI and Microsoft's own security-specific model, marking a significant stride in Microsoft's ongoing efforts to incorporate AI-oriented features for end-to-end defense. Below are some of the features introduced in Security Copilot:

Natural Language Processing (NLP) to Code Generation

Threat Detection Rule and Query Generators: Security Copilot allows users to ask about suspicious user logins over a specific time period in plain language. This feature leverages generative AI to translate natural language into queries, enabling the system to sift through vast amounts of data and identify potential security threats.

Summarizers, Explainers, and Advisors

Incident Response Post-mortems: Security Copilot can be employed to create a PowerPoint presentation outlining an incident and its attack chain. This feature uses generative AI to analyze the details of a security incident and generate a concise, easy-to-understand summary of what happened, why it happened, and what the impact was.

Code Analysis

Threat Analysis: Security Copilot can accept files, URLs, and code snippets for analysis. This feature leverages generative AI to scrutinize the provided code or content, identify potential vulnerabilities or threats, and provide detailed reports on the findings.

Reverse Engineering

Malware Analysis: Security Copilot has the capability to reverse engineer exploits. This feature uses generative AI to deconstruct and analyze potentially harmful files or code, providing detailed insights into how a piece of malware functions and how to defend against it.

Also, note that Microsoft's 3-day developer conference, Microsoft Build, kicks off today. While it's not security-focused, you can bet your bottom dollar that generative AI will be a key theme. The show starts at 12PM ET with a keynote from CEO Satya Nadella, and you can watch it live here. Maybe we'll see some cool new stuff for Security Copilot and/or GitHub Copilot? 🤔

Other Use Cases to Consider

This next section quickly covers a number of potential use cases that I didn’t touch on in the previous section.

Red Team Use Cases

Penetration Testing: LLMs can be used to automate parts of the penetration testing process. This could involve anything from identifying potential attack vectors to actually executing the attack, such as by exploiting known vulnerabilities or launching brute-force attacks.

Social Engineering Attacks: Generative AI can be used to craft convincing phishing emails or other types of deceptive communication. It could also potentially be used for vishing (voice phishing), by mimicking a person's voice to trick a victim into revealing sensitive information.

Automating Exploit Generation: LLMs could potentially be trained to generate new exploits based on known vulnerability types. This could allow a red team to quickly develop a wide range of attacks for testing purposes.

Password Cracking: LLMs can be used to generate lists of potential passwords based on patterns identified in previous data breaches. This could make brute-force attacks more efficient by focusing on the most likely passwords first.

Automating Reconnaissance: LLMs could automate the process of gathering and analyzing publicly available information about a target, which is often the first step in planning an attack.

Miscellaneous Use Cases

Security Awareness Training: Generative AI can create realistic phishing emails, spoof websites, or other potential security threats for training purposes. This can help employees to recognize and respond appropriately to these threats, thereby reducing the risk of successful cyber attacks.

Policy and Compliance Management: LLMs can be used to read, understand, and summarize complex regulatory documents, helping organizations to ensure that their cybersecurity policies and practices are in compliance with relevant laws and standards.

Metrics

Before we close out, I'd like to talk to you about your car's extended warranty. Kidding. Metrics. If you can't measure it, you can't track it. Most mature security and product organizations rely heavily on metrics to make better-informed decisions and to know whether they are headed in the right direction. Keep in mind that the impact any solution has on metrics will vary depending on the implementation, the context in which it is used, and, most importantly, the adeptness of the people leveraging the technology.

Below are seven metrics that generative AI security solutions can help improve:

Time to detection

Time to remediation

Time to threat containment

Time to production

Time to recover

False Positive/Negative Rates

Incident response time
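Several of these metrics reduce to simple arithmetic over incident timestamps and alert outcomes, which makes before-and-after comparisons straightforward once you adopt a tool. The sketch below assumes a hypothetical `Incident` record with four timestamps and measures containment and remediation from detection time; organizations define these windows differently, so adjust to match yours. The error rates use the standard definitions: false positive rate = FP / (FP + TN) and false negative rate = FN / (FN + TP).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class Incident:
    """Hypothetical incident record; field names are illustrative, not from any product."""
    occurred: datetime
    detected: datetime
    contained: datetime
    remediated: datetime


def mean_hours(durations: list[timedelta]) -> float:
    """Average a list of durations and express the result in hours."""
    return mean([d.total_seconds() for d in durations]) / 3600


def time_metrics(incidents: list[Incident]) -> dict[str, float]:
    """Mean time to detect, contain, and remediate (the latter two measured from detection)."""
    return {
        "time_to_detect_h": mean_hours([i.detected - i.occurred for i in incidents]),
        "time_to_contain_h": mean_hours([i.contained - i.detected for i in incidents]),
        "time_to_remediate_h": mean_hours([i.remediated - i.detected for i in incidents]),
    }


def error_rates(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """False positive and false negative rates from confusion-matrix counts."""
    def ratio(num: int, den: int) -> float:
        return num / den if den else float("nan")  # undefined when there are no cases

    return {
        "false_positive_rate": ratio(fp, fp + tn),
        "false_negative_rate": ratio(fn, fn + tp),
    }


if __name__ == "__main__":
    start = datetime(2023, 5, 1, 9, 0)
    incidents = [
        Incident(start, start + timedelta(hours=2), start + timedelta(hours=3), start + timedelta(hours=10)),
        Incident(start, start + timedelta(hours=4), start + timedelta(hours=6), start + timedelta(hours=12)),
    ]
    print(time_metrics(incidents))
    print(error_rates(tp=40, fp=10, tn=940, fn=10))
```

In practice you'd compute these over comparable periods before and after rolling out a tool; a single snapshot says little on its own.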

Conclusion

If you're feeling a bit skeptical about Generative AI and LLMs, I get it. After all, they're not perfect and can sometimes produce results that aren't quite up to scratch. But don't let that deter you, because you'll inevitably end up leveraging these types of solutions. A lot of work is going into preventing AI "hallucinations" (for example, NVIDIA's NeMo Guardrails). And when it comes to data privacy and security, as long as you're following the right protocols, these technologies can be a powerful asset. Team8 has put together a great guide on managing generative AI risks in the enterprise.

Generative AI-backed security solutions are by no means a magic wand, but they can help in a number of ways. They can help with SecOps, Incident Response, Offensive Security, and Software Supply Chain Security, and they can help bridge the skills gap in cybersecurity. And let's not forget, our adversaries are already using these technologies. So, why shouldn't we? 🤷🏾‍♂️

In a follow-up post, I'll be diving into the world of custom in-house autonomous security agents and why I believe they're the future of cybersecurity. But I'd love to hear your thoughts. What do you think about all this? Have I missed anything important? Your feedback is always welcome. I’d love to keep the conversation going!

Enjoyed this post? I'd be thrilled if you could subscribe and share it with your network. I create these posts in my spare moments, and seeing the reader community expand truly fuels my motivation to write more. And if you're feeling particularly generous, you could even buy me a coffee ☕. Thanks for reading; your support means a ton!

![Screenshot: generating unit tests for a gaussian_elimination function in Visual Studio Code](https://substackcdn.com/image/fetch/$s_!FTp5!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fcabb6b3b-ea65-48bf-b7fa-b4ab0913e532_1974x918.png)