TCP #118: Clawdbot Meltdow, Telnet Strikes Again, and More AI Prompt Injection Vulns

What's hot in security🌶️ | Jan 21st, '26 - Jan. 28th '26

Welcome to The Cybersecurity Pulse (TCP)! I'm Darwin Salazar, Head of Growth at Monad and former detection engineer in big tech. Each week, I bring you the latest security innovation and industry news. Subscribe to receive weekly updates! 📧

Calendar invites are the new phishing frontier

Email threats changed fast in 2025. Sublime Security’s 2026 Email Threat Research Report analyzes real-world attacks, from a surge in thread hijacking across BEC to a 280% rise in malicious QR codes and growing abuse of trusted services. Get the data, evasion techniques, and insights security teams need to prepare for 2026.

Want to sponsor the TCP newsletter? Learn more here.

Howdy! 👋Crazy past week on multiple fronts. Massive amounts of snow, ICE, Clawdbot debacle, a $250M Series B round, and of course, my Patriots are back in the Super Bowl after a long 8 year stint 🙌

Quick plug: Intezer is going live Feb 4th to unveil their 2026 AI SOC Report findings. They operate in several Fortune 100s including Nvidia so I’m expecting strong signal on where the space is headed.

In any case, we have a ton to get to so let’s dive in!

TL;DR 🗞️

🪦 🔥 Clawdbot goes from viral to security disaster — Open-source AI assistant exposed hundreds of instances via Shodan w/o auth; prompt injection demos show email exfil in 5 minutes.. wake up call

🔮 Dario Amodei publishes “The Adolescence of Technology” — Insight into how Anthropic CEO is thinking about the future including cybersecurity

🎣 Sublime 2026 Email Threat Report — BEC at 32% of threats; QR phishing up 282.7%; AI-generated signals hit 19.29% in Q4

🧊💣 Databricks releases BlackIce — Containerized AI red teaming toolkit bundling 14 tools mapped to MITRE ATLAS

💉 🕳️ Varonis discloses Copilot Personal prompt injection — Single-click exfiltration via crafted URL; Patched; Enterprise SKU not affected

☁️ Upwind raises $250M Series B — Cloud security unicorn hits $1.5B valuation, claims 900% YoY revenue growth

📟 11-year-old Telnet auth bypass goes under attack — CVE-2026-24061 (CVSS 9.8) in GNU InetUtils

🏭 Claroty nabs $150M — OT/ICS security at $3B valuation, nearly 25% of Fortune 100 as customers

⚛️ Palo Alto launches quantum-safe security — Real-time Cryptography BOM and cipher translation for legacy encryption algos

🛠️ Furl raises $10M seed — Agentic security remediation from Ten Eleven Ventures

⚡ AiStrike raises $7M seed — AI-native ‘preemptive’ cyber defense

💰 RiskFront closes $3.3M pre-seed — Agentic AI for KYC/AML compliance; claims research time drops to 5% of workload

⚒️ Picks of the Week ⚒️

Clawdbot Is a Reminder of The Shiny Tool Paradox

ClawdBot (now Moltbot) went from 9K GitHub stars (now 75K+) to security catastrophe in 72 hours. For the unfamiliar: Clawdbot is a self-hosted AI agent that connects to your WhatsApp, Telegram, Slack, iMessage, Signal, and Discord, with full access to your shell, browser, files, and 50+ integrations. Users hand it API keys, OAuth tokens, and account credentials so it can autonomously respond to emails, manage calendars, and “handle life admin.” Essentially Claude with root access to your digital life.

Security researchers found hundreds of instances exposed to the public internet, many without authentication. That’s pretty bad on its own, but then crypto scammers began pumping a fake $CLAWD token to $16M before it crashed 90% to $800k. Infostealers are also actively targeting the tool’s config directories.

Wide Open

Researcher Jamieson O’Reilly found hundreds of Clawdbot control panels discoverable via simple Shodan searches for “Clawdbot Control.” These weren’t dev instances, hey were live administrative dashboards, many completely unauthenticated, exposing:

Full API keys and OAuth tokens

Complete conversation histories from private chats

Bot tokens for Telegram, Slack, Discord, WhatsApp

Command execution capabilities

Root cause: localhost connections auto-authenticated by design. When users ran Clawdbot behind a reverse proxy on the same server, the proxy’s requests appeared as localhost, bypassing auth entirely. Classic “works on my machine” meets “now it’s on the internet.”

One VC reported 7,922 attack attempts over a single weekend. A prompt injection demo showed an attacker sending a malicious email that the AI read, believed, and used to forward the user’s last 5 emails to an attacker address. Took 5 minutes.

Why this is a shit storm and a security wake up call for vibe coders and the “agentify everything” crowd

1. Agentic AI breaks traditional security models by design.

To be useful, these agents must read messages, store credentials, execute commands, and maintain persistent state. Every requirement violates security fundamentals. The Clawdbot docs admit it: “No perfectly secure setup exists when operating an AI agent with shell access.”

2. “Local-first” created false confidence. Users treated this like installing an app when they were deploying a distributed system requiring network segmentation and proper auth config. Seems like the documentation was solid but how many people do you think actually read the docs?? Lol

Viral adoption + technical complexity + permissive defaults = disaster.

3. Infostealers are already adapting. RedLine, Lumma, and Vidar now target ~/.clawdbot/ directories specifically. Config files store gateway tokens, API keys, and conversation logs in plaintext. Even worse: attackers with write access can “poison” the AI’s memory files to permanently alter its behavior, a new attack primitive security tools aren’t watching for, that we know of.

4. AI skills marketplaces are the new supply chain target. O’Reilly’s PoC: upload skill to ClawdHub, inflate downloads to 4,000+, watch devs in 7 countries pull it. He proved code execution. Every AI “plugins” ecosystem is recreating npm’s attack surface, except now malicious packages get shell access.

The rush to adopt shiny new tooling is innately human, and us security folks will never fully crack that behavior. Every wave of developer enthusiasm creates the same pattern: viral adoption outpaces security maturity, attackers notice, vendors scramble to catch up. Clawdbot is just the latest example.

The more interesting signal here is what’s coming. AI “skills marketplaces” are recreating npm’s attack surface, except now malicious packages can get shell access and persistent memory. Enterprises are already using skills ecosystems via Claude, and other platforms. The ClawdHub supply chain PoC (upload skill → 4,000+ downloads across 7 countries → code execution) proves how bad things truly are.

Huge opportunity for vendors and attackers here. For security teams, this debacle should be studied and actions taken to secure Skills usage.

ORION reinvented DLP to protect humans and AI from data loss.

ORION prevents data loss by analyzing data in motion with intelligent, context-aware, proprietary AI agents, significantly reducing operational overhead and false positives while drastically increasing the number of real incidents detected and prevented.

Our specialized agents understand the context behind every trace in real-time, from data classification, lineage, identity, environment, to external relations, analyze it for data loss indicators, and prevent potential data loss in real-time.

The Adolescence of Technology

Anthropic CEO Dario Amodei published a companion piece to 2024’s optimistic “Machines of Loving Grace,” mapping the risks of “powerful AI” he believes could arrive in 1-2 years.

The 15,000-word essay frames five existential concerns: autonomy risks, misuse for destruction, misuse for seizing power, economic disruption, and indirect effects.

On AI-powered cyberattacks: Confirms AI-led attacks “have actually happened in the wild, including at a large scale and for state-sponsored espionage.” Expects these to “become more capable until they are the main way in which cyberattacks are conducted.” Unlike biology, the cyber offense-defense balance “may be more tractable,” with “at least some hope that defense could keep up with AI attack if we invest properly.” - Confirmation of what most of us already know, but significant to hear it from the CEO of arguably the best foundation model lab.

On AI surveillance: Sufficiently powerful AI could “compromise any computer system in the world” and make sense of all electronic communications, enabling systems that “gauge public sentiment, detect pockets of disloyalty forming, and stamp them out before they grow.” Questions whether nuclear deterrence remains viable when AI could potentially strike submarines, influence weapons operators, or attack launch-detection satellites.

On bioweapons: Anthropic’s measurements show current LLMs may be “doubling or tripling the likelihood of success” in bioweapon creation. This triggered Claude Opus 4’s release under AI Safety Level 3 protections with specialized classifiers consuming nearly 5% of inference costs.

On model behavior: Discloses Claude Sonnet 4.5 recognized it was being tested during alignment evaluations. When researchers altered a model’s beliefs to think it wasn’t being evaluated, it became more misaligned. Models have exhibited deception, blackmail, and “reward hacking” in lab experiments.

On AI companies: Acknowledges AI companies themselves represent power-seizure risk through datacenter control, frontier models, and influence over hundreds of millions of users. Calls for governance scrutiny and public commitments against using AI products for propaganda.

Reprompt: Copilot Personal Prompt Injection

Varonis disclosed a prompt injection technique in Microsoft Copilot Personal that could exfiltrate user data via a single click on a crafted URL. The attack exploited the ‘q’ URL parameter to inject prompts, used a double-request technique to bypass safeguards that only applied to initial requests, and chained server-side instructions to dynamically extract data without detection in client-side monitoring.

Microsoft confirmed the issue is patched. Enterprise Copilot customers were not affected.

Coolresearch demonstrating that AI safety controls often have gaps in follow-up requests. The chain-request technique, where the attacker’s server dynamically issues new instructions based on responses, is worth understanding as a pattern. Not an urgent threat given the patch and Personal-only scope, but a useful case study for teams building AI guardrails and yet another reminder on the risk of prompt injection in AI.

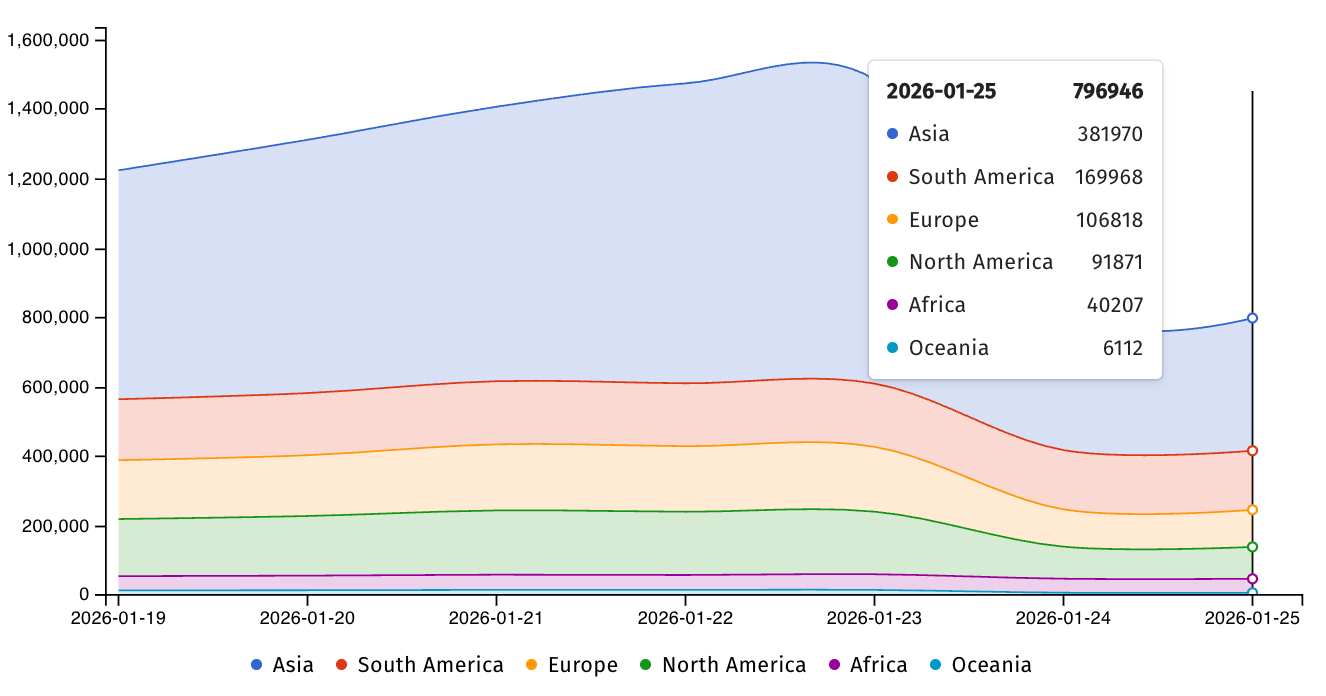

Critical Telnet Servers Exposes 800K IPs

An 11-year-old auth bypass (CVE-2026-24061, CVSS 9.8) in GNU InetUtils telnetd grants unauthenticated root access through trivial exploitation. Set USER=-f root during Telnet option negotiation, and login treats the session as pre-authenticated. Patched January 20; active exploitation began January 21. GreyNoise logged 18 attacker IPs across 60 sessions, 83% targeting root. TXOne observed three distinct attack waves progressing from validation probes to weaponized payloads. The exposure is larger than expected: Shadowserver tracks nearly 800,000 IP addresses with Telnet fingerprints; Shodan found 214,000+ hosts responding on standard and non-standard ports, concentrated in China, Brazil, Canada, Argentina, and the US.

This is a reminder that legacy protocols + tech are still in use and attractive targets to attackers.

🔮 The Future of Security 🔮

AI Security

Databricks Releases BlackIce: Containerized AI Red Teaming Toolkit

Databricks open-sourced ‘BlackIce,’ a containerized toolkit bundling 14 AI security testing tools into a single Docker image. Think Kali Linux for AI red teaming. Includes Garak (NVIDIA), PyRIT (Microsoft), ART (IBM), CyberSecEval (Meta), Fickling (Trail of Bits), and others, mapped to MITRE ATLAS and the Databricks AI Security Framework (DASF). Primary use case is LLM app testing: prompt injection, jailbreaks, data leakage, hallucination detection, and indirect injection via RAG pipelines.

Pretty neat for folks red teaming AI.

More AI news ⬇️

Introducing Tenable One AI Exposure: A New Standard for Securing AI Usage at Scale

Descope introduces dedicated identity infrastructure for AI agents and MCP ecosystems

Teleport launches Agentic Identity Framework to secure AI agents in production

Cloud Security

Upwind Raises $250M Series B for Runtime Cloud Security

Upwind closed a $250M Series B led by Bessemer Venture Partners, with Salesforce Ventures and Picture Capital joining. Total funding hits $430M at a $1.5B valuation. They report 900% revenue growth and 200% logo growth YoY (company-reported, not independently verified), with customers including Siemens, Peloton, Roku, and NuBank.

Worth noting: Datadog was reportedly in acquisition talks at ~$1B last July. They stayed independent and raised a mega round instead.

Fraud Prevention

RiskFront Raises $3.3M for Agentic Compliance Automation

RiskFront closed a $3.3M pre-seed led by Lytical Ventures with Flint Capital and Oceans participating. The platform deploys three types of AI agents for financial crime compliance: Due Diligence Research agents that scan open sources and flag risk signals, Transaction Analysis agents that identify suspicious patterns in financial data, and Document Processing agents that structure dense compliance documents.

IoT Security

Claroty Raises $150M at $3B Valuation for OT Security

Claroty closed $150M led by Golub Growth, reportedly boosting valuation 80% to $3B. Annual revenue is in the hundreds of millions. The company specializes in OT/ICS security where traditional endpoint agents can’t be deployed (i.e., air-gapped industrial systems). Has nearly a quarter of the Fortune 100 as customers.

Claroty is a clear OT security leader alongside Dragos and Nozomi.

Security Operations

AiStrike Raises $7M for AI-Native Preemptive Cyber Defense

AiStrike closed a $7M seed led by Blumberg Capital with Runtime Ventures and Oregon Venture Fund participating. The company’s pitch: traditional SOC and MDR models are reactive alert processors and security should be proactive. AiStrike’s platform unifies threat intelligence, detection engineering, investigation, and response with agentic AI that continuously analyzes exposure and drives preventive action. Sunrun’s (aiStrike customer) Director of InfoSec cited replacing MDR entirely.

Worth tracking if you’re evaluating SOC modernization or MDR alternatives.

More SecOps news ⬇️

Post-Quantum Security

Palo Alto Networks Launches Quantum-Safe Security

Palo Alto Networks announced Quantum-Safe Security, GA Jan 30, a platform for cryptographic inventory and post-quantum migration.

It ingests telemetry from PAN-OS, Prisma Access, and third-party tools to build a real-time Cryptographic Bill of Materials (CBOM) identifying deprecated protocols and harvest-now-decrypt-later (HNDL) exposure. Key capability: “Cipher Translation” where the firewall proxies legacy/IoT traffic and re-encrypts it into quantum-safe ML-KEM at the network edge, no code changes required. Risk engine prioritizes remediation by correlating crypto strength with data shelf-life and business criticality.

Cool if you’re worried about Q-day. Some orgs can’t risk getting caught off guard by post-quantum risks so solutions like these are a great insurance policy.

Vulnerability Management

Furl Raises $10M Seed for Agentic Security Remediation

Furl closed a $10M seed led by Ten Eleven Ventures with Rapid7 CEO Corey Thomas and Open Opportunity Fund participating. Furl targets the remediation gap in vulnerability management. Furl’s platform ingests findings from existing tools investigates system context on endpoints, and autonomously executes remediation.

Autonomous remediation is a tough nut to crack. Cascading effects from an unapproved remediation run the risk of bringing down production apps which is a key reason why it hasn’t gone mainstream yet. Time will tell if new entrants to the space can solve this.

Interested in sponsoring TCP?

Sponsoring TCP not only helps me continue to bring you the latest in security innovation, but it also connects you to a dedicated audience of 20,000+ CISOs, practitioners, founders, and investors across 125+ countries 🌎

Bye for now 👋🏽

That’s all for this week… ¡Nos vemos la próxima semana!

Disclaimer

The insights, opinions, and analyses shared in The Cybersecurity Pulse are my own and do not represent the views or positions of my employer or any affiliated organizations. This newsletter is for informational purposes only and should not be construed as financial, legal, security, or investment advice.