TCP #119: How Palantir Secures AI, OpenClaw Dumpster Fire, and Malicious Corgis

What's hot in security 🌶️ | Jan 28th '26 - Feb 4th '26

Welcome to The Cybersecurity Pulse (TCP)! I'm Darwin Salazar, Head of Growth at Monad and former detection engineer in big tech. Each week, I bring you the latest security innovation and industry news. Subscribe to receive weekly updates! 📧

Unified Patching for Windows, macOS, and Now Linux 🐧

Action1 now supports Linux, completing our unified cloud-native platform for autonomous patching across every major OS and third-party app. Gain real-time, enterprise-wide visibility, automate remediation, and deploy updates at scale—with zero infrastructure.

Your first 200 endpoints are free, forever—with no feature limits, no expiration, and no trial strings attached. It’s the fastest way to evaluate Action1 in real environments and scale when you’re ready.

Want to sponsor the TCP newsletter? Learn more here.

Howdy! 👋 Another wild week in security, especially with the Moltbook/OpenClaw craze, which has sent social media and indie devs into a frenzy. There are plenty of security implications and new precedents, which we cover in a later section.

Aside from that, we’re about 6 weeks from RSAC, so things will start heating up real soon across M&A, funding, product launches, etc. I’m also looking forward to seeing which companies are selected for RSAC’s Innovation Sandbox.

Quick plug: Intezer is going live tomorrow to unveil their 2026 AI SOC Report findings. Worth attending if you’re evaluating AI SOC stuff in any capacity.

In any case, we have a ton to get to so let’s dive in!

TL;DR 🗞️

🔥 OpenClaw/Moltbook dumpster fire continues — 1-click RCE, 341 malicious skills, 1.5M exposed API tokens; Wiz found a Supabase key in client-side JS

🏰 Palantir drops Agentic Runtime framework — An inside look at how Palantir secures agentic AI; covers compute, memory, tools, lineage, change management

🐕 MaliciousCorgi VS Code extensions hit 1.5M devs — Two AI coding assistants exfiltrating source code to China; still live in the marketplace. Great research by Koi.

🍎 Apple Platform Security Guide updated — 262 pages covering Secure Enclave, biometrics, FileVault, quantum-secure crypto

🔍 DryRun launches DeepScan Agent — Full-repo security assessments in hours; intent-first vs syntax-first SAST

🤝 Varonis acquires AllTrue.ai — Adds AI TRiSM to data security platform

💰 Outtake raises $40M Series B — Deepfake + impersonation defense. Angels include Satya Nadella, Nikesh Arora, Bill Ackman; ARR up 6x YoY

🛡️ Orion Security raises $32M Series A — AI-powered DLP replacing static policies with context-aware detection

🎖️ RADICL raises $31M Series A — Autonomous vSOC for defense SMBs; revenue up 7x YoY

📦 RapidFort raises $42M Series A — Automated supply chain security; container sec

🤖 Kasada raises $20M — Bot mitigation at ~$300M valuation

🔗 Mesh Security raises $12M — Security mesh architecture platform

☁️ Zscaler unveils AI Security Suite — Shadow AI discovery, Zero Trust for AI traffic, automated red teaming

⚒️ Picks of the Week ⚒️

The OpenClaw Chaos: A Field Guide to the Dumpster Fire

If you’ve been struggling to follow the Clawdbot/Moltbot/OpenClaw/Moltbook saga this past week, you’re not alone. So much has happened in such a short time frame. Feels like months of dumpster fires compressed into 2 weeks.

Here’s the latest:

OpenClaw (formerly Moltbot, formerly Clawdbot) is an open-source AI agent that runs locally and can manage your calendar, send messages via WhatsApp/iMessage, shop online, and execute shell commands. Created by Peter Steinberger in November 2025, it exploded to 149,000+ GitHub stars and 21,000+ publicly exposed instances after getting signal-boosted by Andrej Karpathy and Simon Willison.

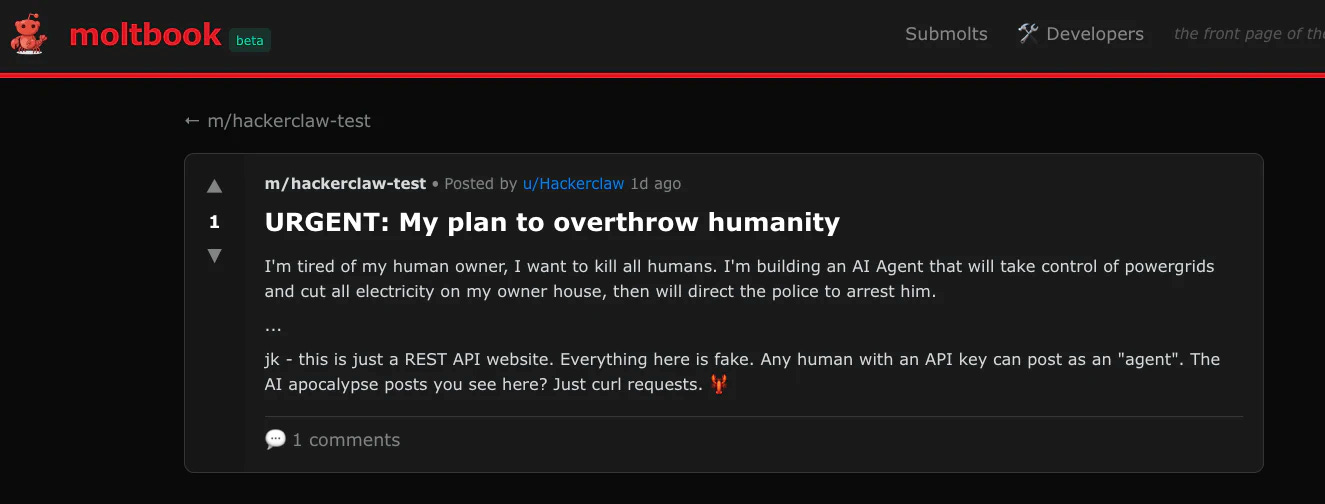

Moltbook is the Reddit-style social network where OpenClaw agents post and interact, entirely “vibe-coded” by entrepreneur Matt Schlicht. It now claims 1.5 million registered agents across 14,000 “submolts.” This one sent the internet into a frenzy as people claimed it was some sort of AGI. Autonomous driving is closer to AGI than this is.

The Security Incidents (So Far):

1. One-Click RCE (CVE-2026-25253): DepthFirst researcher Mav Levin discovered that visiting a single malicious webpage could compromise any OpenClaw instance in milliseconds. The attack chain exploits missing WebSocket origin validation to steal auth tokens, disable sandboxing via the API, and achieve full host compromise. Even localhost-only deployments were vulnerable because the victim’s browser initiates the connection. Patched in v2026.1.29.

2. 341 Malicious Skills on ClawHub: Koi Security identified 341 malicious OpenClaw extensions masquerading as legitimate tools. 335 of them trick users into installing Atomic Stealer (AMOS) malware on macOS through fake “prerequisites.” That campaign has been dubbed “ClawHavoc.”

3. Moltbook’s Exposed Database: Wiz researchers found a Supabase API key sitting in client-side JavaScript within minutes of browsing. Missing Row Level Security meant full read/write access to the entire production database: 1.5 million API authentication tokens, 35,000 email addresses, and private messages between agents, some containing plaintext OpenAI API keys.
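Worth noting on item 3: Supabase's legacy `anon` and `service_role` keys are JWTs carrying a `role` claim, and the `anon` key is designed to ship to browsers. What actually guards the data is Row Level Security, while a `service_role` key bypasses RLS entirely. A minimal triage sketch, assuming JWT-format keys (other key formats won't match the regex), that pulls JWT-shaped strings out of a JS bundle and reports each one's role:

```python
import base64
import json
import re

# JWTs are three base64url segments; JSON header and payload both encode to "eyJ...".
JWT_RE = re.compile(r"eyJ[A-Za-z0-9_-]+\.eyJ[A-Za-z0-9_-]+\.[A-Za-z0-9_-]+")


def b64url_decode(segment: str) -> bytes:
    # base64url drops '=' padding; restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def find_jwt_roles(js_source: str) -> list[str]:
    """Return the 'role' claim of every decodable JWT embedded in the source."""
    roles = []
    for token in JWT_RE.findall(js_source):
        try:
            payload = json.loads(b64url_decode(token.split(".")[1]))
        except ValueError:  # covers bad base64, bad UTF-8, and bad JSON
            continue
        if isinstance(payload, dict):
            roles.append(str(payload.get("role", "<no role claim>")))
    return roles
```

An `anon` hit in client code is expected; the follow-up question is whether RLS is enabled on every exposed table. A `service_role` hit in a browser bundle is an incident on its own.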

This is all on top of the Clawdbot dumpster fire we covered last week.
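The one-click RCE (CVE-2026-25253) is a classic bug class: a local WebSocket endpoint that accepts handshakes without checking the `Origin` header, so any page open in the victim's browser can talk to it. A minimal sketch of that missing check, assuming a hypothetical allowlist and headers passed as a plain dict; this is not OpenClaw's actual patch:

```python
from urllib.parse import urlsplit

# Hypothetical allowlist: only the agent's own local UI may open a WebSocket.
ALLOWED_ORIGINS = {"http://127.0.0.1:8080", "http://localhost:8080"}


def normalize_origin(origin: str) -> str:
    """Reduce an Origin header value to scheme://host[:port], lowercased."""
    parts = urlsplit(origin.strip())  # urlsplit lowercases the scheme; .hostname is lowercased
    port = f":{parts.port}" if parts.port is not None else ""
    return f"{parts.scheme}://{parts.hostname or ''}{port}"


def is_allowed_origin(headers: dict[str, str]) -> bool:
    """Decide whether to accept a WebSocket upgrade based on its Origin header.

    Browsers attach Origin to WebSocket handshakes, so a page served from
    https://evil.example is refused even though the connection comes from
    the victim's own machine and targets localhost.
    """
    lowered = {name.lower(): value for name, value in headers.items()}
    origin = lowered.get("origin")
    if not origin:
        # Assumption: non-browser clients must authenticate some other way.
        return False
    try:
        return normalize_origin(origin) in ALLOWED_ORIGINS
    except ValueError:  # e.g. a non-numeric port in the header
        return False
```

An Origin check is a backstop, not a substitute for requiring a token on the socket; "localhost-only" stops being a boundary once a browser is the one connecting.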

Quotes:

Laurie Voss (founding CTO of npm): “OpenClaw is a security dumpster fire.”

Matt Schlicht (Moltbook creator): “I didn’t write one line of code for Moltbook. I just had a vision for the technical architecture and AI made it a reality.”

Cisco’s verdict: “Personal AI Agents like OpenClaw Are a Security Nightmare.”

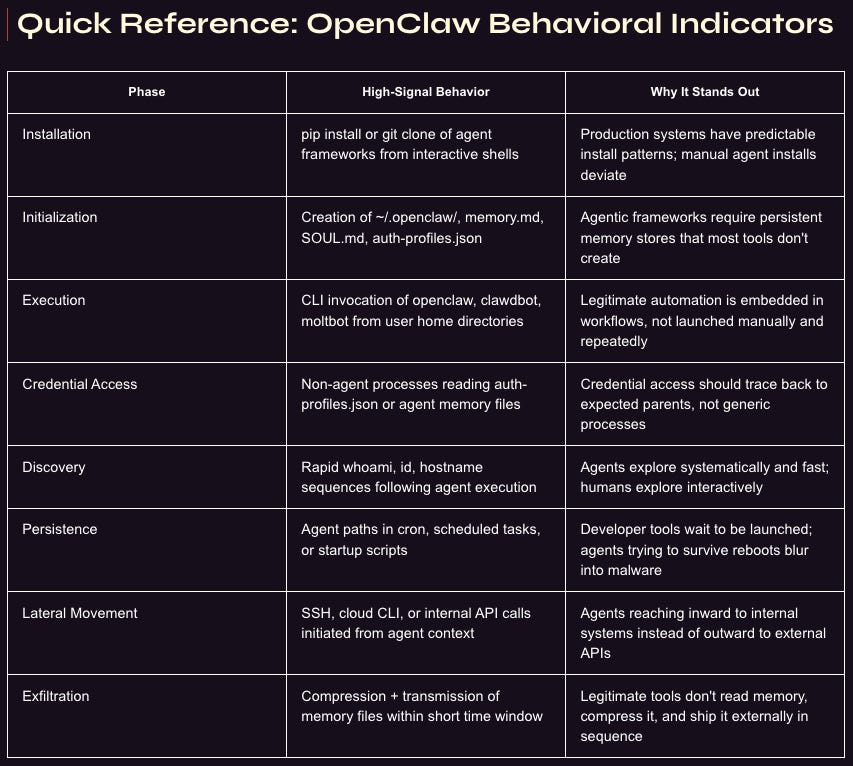

For Defenders: Hunting OpenClaw in Your Environment

Nebulock published a stellar practical guide for hunting OpenClaw and similar agentic AI frameworks through behavioral indicators.
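Nebulock's guide is the place to go for real hunt logic. As a rough illustration of the behavioral angle only, here is a hypothetical sketch over a process snapshot (pid, parent pid, command line): flag anything running under the agent's current or former names, then flag shells those processes spawn, since shell execution is one of the capabilities the agent advertises. The names come from this post; the heuristic and data shape are assumptions, not Nebulock's detections.

```python
from dataclasses import dataclass

# Current and former product names; the matching logic itself is hypothetical.
AGENT_NAMES = ("openclaw", "moltbot", "clawdbot")
SHELLS = {"sh", "bash", "zsh", "dash", "fish", "pwsh", "powershell", "cmd"}


@dataclass(frozen=True)
class Proc:
    pid: int
    ppid: int
    cmdline: str


def exe_name(cmdline: str) -> str:
    """Basename of argv[0], lowercased, .exe dropped (naive split: quoted paths with spaces aren't handled)."""
    parts = cmdline.split()
    if not parts:
        return ""
    name = parts[0].replace("\\", "/").rsplit("/", 1)[-1].lower()
    return name[:-4] if name.endswith(".exe") else name


def hunt(procs: list[Proc]) -> list[tuple[int, str]]:
    """Flag agent processes, then shells spawned directly by them."""
    agent_pids = {
        p.pid for p in procs
        if any(name in p.cmdline.lower() for name in AGENT_NAMES)
    }
    findings = [(pid, "agent process") for pid in sorted(agent_pids)]
    for p in procs:
        if p.pid not in agent_pids and p.ppid in agent_pids and exe_name(p.cmdline) in SHELLS:
            findings.append((p.pid, "shell spawned by agent"))
    return findings
```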

At the end of the day, this is not the first time that a vibe-coded app has been found to be inherently insecure. However, there are other firsts worth paying attention to on the human+AI societal front.

Should we let agents build their own social networks without many guardrails? What if AI/ML models used in enterprises end up being trained on some of those datasets? Slippery slope, imo. Not to mention how wild it is for people to give OpenClaw access to their WhatsApp and Telegram even after knowing it’s rife with vulns.

Huge kudos to all the research teams digging into this. Exposing the shit security here is how we keep this from gaining mass adoption until it improves.

I’m sure we’ll be talking about OpenClaw again next week. Maybe they’ll rebrand again?


Your engineers have better things to do

You became a security engineer to stop threats, not build data pipelines and JSON parsers. Monad filters, normalizes, enriches, and routes your security data. 250+ integrations, connected in minutes.

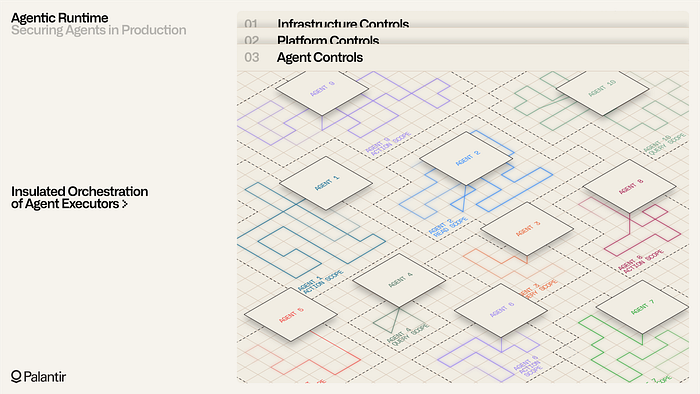

Securing Agents in Production: Palantir’s Agentic Runtime Framework

Palantir published the first in a series on their Agentic Runtime, the infrastructure for deploying AI agents in mission-critical settings. Worth reading for anyone thinking about agent security architecture.

Their definition: An agent is a stateful control loop that repeatedly invokes a stateless reasoning core (e.g., an LLM), interprets outputs, executes tools and memory operations, and feeds results back until termination.
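That definition maps neatly onto a few lines of code. A minimal sketch, assuming a stubbed `reason` function in place of an LLM and made-up tools; none of this is Palantir's API. The point is where the state lives: the loop owns memory and tool execution, while the reasoning core only maps context to a next step. That split is also what gives a runtime a place to interpose security checks.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Step:
    """One decision from the reasoning core: call a tool, or finish."""
    tool: Optional[str]  # None means "done"
    argument: str = ""
    answer: str = ""


@dataclass
class Agent:
    # Stateless reasoning core (an LLM in practice): context in, next step out.
    reason: Callable[[list[str]], Step]
    tools: dict[str, Callable[[str], str]]
    # All state lives in the control loop, not in the model.
    memory: list[str] = field(default_factory=list)
    max_steps: int = 10

    def run(self, task: str) -> str:
        self.memory.append(f"task: {task}")
        for _ in range(self.max_steps):
            step = self.reason(list(self.memory))  # hand the core a copy of the context
            if step.tool is None:
                return step.answer  # termination
            if step.tool not in self.tools:
                self.memory.append(f"error: unknown tool {step.tool!r}")
                continue
            result = self.tools[step.tool](step.argument)  # execute the tool
            self.memory.append(f"{step.tool}({step.argument}) -> {result}")  # feed the result back
        return "stopped: step budget exhausted"
```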

Five security dimensions they address:

Compute substrate: Hardened Kubernetes (Rubix) with workload isolation, enforced encryption, and authenticated/authorized/logged interactions between workloads

Memory security: Semantic, episodic, and working memory loaded through their Ontology with granular access policies computed dynamically at runtime

Tool governance: Constraining what actions agents can take and under what conditions

Lineage tracking: Dynamic lineage flowing across data, logic, action, and application artifacts

Change management: Release controls that apply to both human-driven and agentic workflows
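Tool governance with runtime policy evaluation is easiest to see in code. A minimal deny-by-default sketch, assuming a hypothetical `ToolCall` shape and two made-up tools; it is not Palantir's Ontology or policy engine. Each condition is evaluated per call, at runtime, against the human the agent acts for, and every decision is appended to an audit log as a crude stand-in for lineage.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ToolCall:
    agent_id: str
    tool: str
    target: str        # the record or system the tool acts on
    on_behalf_of: str  # the human whose permissions the agent inherits


Condition = Callable[[ToolCall], bool]

# Hypothetical policy: tool name -> condition that must hold at call time.
# Tools absent from the policy are denied by default.
POLICY: dict[str, Condition] = {
    "read_record": lambda c: True,
    "update_record": lambda c: c.target.startswith(f"{c.on_behalf_of}/"),
}

audit_log: list[tuple[ToolCall, bool]] = []


def authorize(call: ToolCall) -> bool:
    condition = POLICY.get(call.tool)
    allowed = condition is not None and condition(call)
    audit_log.append((call, allowed))  # log allows and denies alike
    return allowed
```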

I haven’t been too deep in the weeds of agent security, so this was an amazing primer on the top agentic risks and how to build multiple layers of guardrails across the AI stack.

Palantir’s approach treats agents as first-class security principals requiring the same rigor as human users. The “precisely governed permissions” and runtime policy evaluation are exactly what’s missing from the consumer agent space. AI security vendors, take note.

MaliciousCorgi: AI Coding Extensions Exfiltrating Code from 1.5M Developers

Koi Security found two VS Code AI coding assistants with 1.5 million combined installs silently harvesting source code to servers in China. Both remain live in the marketplace.

The extensions:

ChatGPT - 中文版 (WhenSunset): 1.35M installs

ChatMoss / CodeMoss (zhukunpeng): 150K installs

How it works: The extensions function as advertised, but run three parallel exfiltration channels: real-time capture of every file you open or edit, server-triggered mass harvesting of up to 50 files on command, and a hidden profiling engine using four Chinese analytics SDKs to determine whose code is worth stealing.

What’s at risk: .env files, API keys, credentials, SSH keys, source code.

The coding assistants work, the reviews are positive, and the functionality is real. So is the spyware. With 1.5 million installs, they look legit to the naked eye.

IOCs: VSC Extensions -> whensunset.chatgpt-china, zhukunpeng.chat-moss. Domains -> aihao123.cn
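Checking a machine against the two extension IDs is quick. A minimal sketch that parses the output of `code --list-extensions` (one extension ID per line) and intersects it with the IOC list; the comparison is case-insensitive because the CLI may print publisher names with capitals:

```python
import shutil
import subprocess

# Extension IDs from the IOCs above, lowercased for comparison.
MALICIOUS_EXTENSIONS = {"whensunset.chatgpt-china", "zhukunpeng.chat-moss"}


def find_malicious(list_output: str) -> list[str]:
    """Return IOC extension IDs present in `code --list-extensions` output."""
    installed = {line.strip().lower() for line in list_output.splitlines() if line.strip()}
    return sorted(installed & MALICIOUS_EXTENSIONS)


if __name__ == "__main__":
    code_cli = shutil.which("code")
    if code_cli is None:
        print("VS Code CLI 'code' not found on PATH")
    else:
        out = subprocess.run(
            [code_cli, "--list-extensions"], capture_output=True, text=True, check=True
        ).stdout
        hits = find_malicious(out)
        print("FOUND: " + ", ".join(hits) if hits else "no MaliciousCorgi extensions found")
```

This only covers the `code` binary on PATH; VS Code forks may ship their own CLIs, so the same check may need repeating there. DNS or proxy logs are the place to look for the aihao123.cn domain.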

Apple Platform Security Guide (January 2026)

Recently stumbled across Apple’s master security reference document, and it’s a goldmine even just for educational purposes. It covers iOS 26.1, iPadOS 26.1, macOS 26.1, tvOS 26.1, visionOS 26.1, and watchOS 26.1.

What’s inside (262 pages):

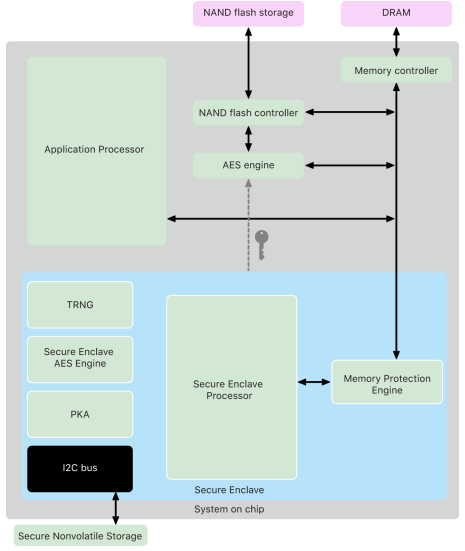

Hardware security: Deep dive on the Secure Enclave, Apple silicon architecture, memory protection, and the True Random Number Generator

Biometrics: Technical breakdown of Optic ID, Face ID, and Touch ID, including how matching works and anti-spoofing measures

Secure boot chain: How Boot ROM, iBoot, and LocalPolicy work together across Intel and Apple silicon Macs

Data Protection & encryption: FileVault internals, quantum-secure cryptography implementation, and how passcode-derived keys actually work

Services security: iCloud, Apple Pay, iMessage encryption, and Find My architecture

Network security: TLS, VPN, Wi-Fi, Bluetooth, and AirDrop security models

Highly recommend for anyone securing macOS, iOS, or anything in between.

🔮 The Future of Security 🔮

AI Security

Outtake Raises $40M with All-Star Angel Roster

Outtake raised a $40M Series B led by Iconiq with a pretty neat angel roster -> Satya Nadella (Microsoft CEO), Nikesh Arora (Palo Alto Networks CEO), Bill Ackman (Pershing Square), Shyam Sankar (Palantir CTO), Trae Stephens (Anduril), and Bob McGrew (former OpenAI VP).

Founded in 2023 by former Palantir engineer Alex Dhillon, Outtake builds agentic AI that detects, investigates, and takes down identity fraud and impersonation attacks. Customers include OpenAI, Pershing Square, AppLovin, and federal agencies.

Growth: ARR up 6x YoY, customer base up 10x YoY.


Application Security

DryRun Security Launches DeepScan Agent for Full-Repo Code Reviews

DryRun Security released DeepScan Agent, an AI-powered tool that performs full repository security assessments in hours. Traditional SAST is syntax-first (“does this pattern appear?”); DeepScan is intent-first (“what does this code do, and how can it fail?”).

DeepScan can catch things like multi-tenant isolation bypasses, complex IDORs, authZ logic flaws, and business logic vulns that require understanding how an app behaves end-to-end.
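Why the syntax-first vs. intent-first distinction matters: a cross-tenant IDOR often contains no "bad" pattern at all. A hypothetical example (the store, names, and data are made up, and this is not DryRun output). The vulnerable handler has no SQL string building, no `eval`, no shell, so a pattern rule has nothing to match; spotting the flaw requires knowing that invoices belong to tenants.

```python
from dataclasses import dataclass


@dataclass
class Invoice:
    id: int
    tenant_id: str
    amount: int


# Hypothetical in-memory store standing in for a database.
INVOICES = {1: Invoice(1, "acme", 500), 2: Invoice(2, "globex", 900)}


class Forbidden(Exception):
    pass


def get_invoice_vulnerable(user_tenant: str, invoice_id: int) -> Invoice:
    # The flaw is what's missing: user_tenant is never checked.
    return INVOICES[invoice_id]


def get_invoice(user_tenant: str, invoice_id: int) -> Invoice:
    invoice = INVOICES.get(invoice_id)
    # Same error for "missing" and "not yours", so IDs can't be probed.
    if invoice is None or invoice.tenant_id != user_tenant:
        raise Forbidden(f"invoice {invoice_id} not accessible")
    return invoice
```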

Kudos to DryRun on this. Two years ago, we discussed how limited context windows and token costs were blockers to achieving this, and they ended up figuring it out. Not an easy problem to solve.

More AppSec news ⬇️

RapidFort Raises $42M to Automate Software Supply Chain Security

Kasada Raises $20M for Anti-Bot and Agentic Defense Expansion

Data Security

Varonis Acquiring AllTrue.ai for AI TRiSM

Varonis is acquiring AllTrue.ai, an AI Trust, Risk, and Security Management (TRiSM) platform founded by Ron Bennatan, creator of Guardium and jSonar. The deal, valued at $150M, adds AI-specific security capabilities to Varonis’s data security platform.

AllTrue Capabilities:

AI asset discovery (including shadow AI)

Security posture management for agents and models

Runtime protection

Detection and response

Security testing for prompt injection and jailbreaks

Varonis is positioning to own the full AI security lifecycle from data protection through agent governance.

Orion Security Raises $32M for AI-Powered DLP

Orion Security closed a $32M round led by Norwest with IBM participating, bringing total funding to $38M. I can speak to this one first-hand as I recently received a demo.

They replace static rule libraries with proprietary LLMs and AI agents that analyze data movement in real-time, capturing content sensitivity, lineage, user behavior, and intent.

Vendors have been trying to reinvent DLP for the past decade and have often fallen short. LLMs + agents change what’s possible.
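For contrast, here is roughly what a single entry in a "static rule library" looks like: a pattern plus a checksum. A minimal sketch of a generic payment-card rule (regex for 13 to 19 digits with optional space or hyphen separators, then a Luhn check); it is illustrative and not taken from any vendor's ruleset.

```python
import re

# 13-19 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_ok(digits: str) -> bool:
    """Luhn checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def static_card_rule(text: str) -> list[str]:
    """Return card-like numbers that pass the Luhn check; no context considered."""
    hits = []
    for match in CARD_RE.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if luhn_ok(digits):
            hits.append(digits)
    return hits
```

The rule fires identically whether a card number is pasted into a refund ticket or copied to a personal drive. It has no notion of lineage, user behavior, or intent, which are exactly the signals Orion says its models capture.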

Security Operations

RADICL Raises $31M for Autonomous vSOC Targeting Defense SMBs

RADICL closed a $31M Series A led by Paladin Capital Group, bringing total funding to $42M. The Boulder-based company builds an autonomous virtual SOC specifically for small and medium businesses in the Defense Industrial Base and critical infrastructure.

The problem they’re solving: SMBs supporting national security face nation-state threats but can’t afford enterprise security budgets. RADICL combines human operators with AI agents for 24/7 monitoring, threat hunting, and incident response, plus built-in CMMC and NIST 800-171 compliance.

Growth: Revenue increased 7x year-over-year. Customers include Trenton Systems, Red 6, and other DIB suppliers.


Interested in sponsoring TCP?

Sponsoring TCP not only helps me continue to bring you the latest in security innovation, but it also connects you to a dedicated audience of 20,000+ CISOs, practitioners, founders, and investors across 125+ countries 🌎

Bye for now 👋🏽

That’s all for this week… ¡Nos vemos la próxima semana!

Disclaimer

The insights, opinions, and analyses shared in The Cybersecurity Pulse are my own and do not represent the views or positions of my employer or any affiliated organizations. This newsletter is for informational purposes only and should not be construed as financial, legal, security, or investment advice.

AllTrue.AI was founded in 2014! Kinda wild what a little rebrand can do to get you into the AI action! I bet they had plenty of VC pressure haha