TCP #120: Opus 4.6 uncovers 500 vulns; 50K forecasted in 2026; and RSAC Innovation Sandbox Announced

What's hot in security🌶️ | Feb 4th '26 - Feb 12th '26

Welcome to The Cybersecurity Pulse (TCP)! I'm Darwin Salazar, Head of Growth at Monad and former detection engineer in big tech. Each week, I bring you the latest security innovation and industry news. Subscribe to receive weekly updates! 📧

Howdy 👋 - What a week it’s been. Six weeks out from RSAC and the industry is already running hot with tons of rumbling underneath the surface.

Innovation Sandbox finalists dropped, earnings are rolling in, and every vendor on the planet is sharpening their pitch decks. It’s a lot. Lucky for you, I read it all so you don’t have to.

This week’s issue is stacked (!) but two quick plugs before we dive in:

🕸️ The Black Hills Information Security/Antisyphon Training crew and John Strand are hosting a free, virtual SOC Summit on March 25 (10AM-4PM ET) with 11 expert speakers covering real-world SOC operations. Highly recommend if you’re a SecOps operator in any capacity.

📝 I also dropped a new post this week: The Great Decoupling: Separating Your SIEM from Your Data Layer. It’s a great snapshot of architectural discussions many teams are having.

Cool, let’s dive in!

TL;DR 🗞️

🤖 Opus 4.6 Finds 500+ High-Severity Vulns — Anthropic's model discovered novel flaws in open-source libraries with no custom tooling or specialized prompting

🏆 RSAC Innovation Sandbox 2026 — 10 finalists announced, $5M each; 6 of 10 building for agentic AI security

🧠 AI Burnout Is Already Here — UC Berkeley study: AI tools didn’t reduce hours; to-do lists expanded to fill freed time

🚨 Google: APTs Using Gemini AI Across Full Kill Chain — First malware families calling LLMs at runtime; 100K+ prompt distillation attack on Gemini; mirrors Anthropic's finding that AI is now the operator, not just the tool

📊 Cloudflare Crushes Q4 Earnings — $614.5M revenue, up 34% YoY; largest contract ever at $42.5M/year

🐛 FIRST Projects 50K+ CVEs in 2026 — Median forecast of 59,427 new CVEs; first year crossing the 50K threshold

💰 Vega Security Raises $120M Series B — AI-native SIEM alternative valued at $700M; Fortune 500 logos already signed

🤖 Ex-GitHub CEO Raises Record $60M Seed — Thomas Dohmke’s Entire valued at $300M; open-source Checkpoints tool captures AI code provenance

🔐 Reco Raises $30M Series B — AI SaaS security platform fueled by 500% YoY growth; $85M total raised

🛡️ Nucleus Security Raises $20M Series C — Exposure management platform ingesting from 200+ tools across IT, cloud, appsec, and OT

🎯 Complyance Raises $20M Series A — GV-led round for AI-native GRC with 16 purpose-built agents live today

🔬 Microsoft Drops Dual LLM Security Research — Backdoor scanner for open-weight models plus single-prompt unalignment across 15 model families

🌐 Wiz Ships Backstage Plugin — Security findings now surfaced inside Spotify’s developer portal at GA

🧰 THOR Collective: How I Use LLMs for Security Work — Josh Rickard’s practical guide to prompting LLMs for SOC analysts and threat hunters

⚒️ Picks of the Week ⚒️

Anthropic’s Opus 4.6 Discovers 500+ High-Severity Vulns in Open-Source Libraries

Anthropic’s Red Team dropped a bombshell along with their latest model release. During testing, Claude Opus 4.6 identified over 500 previously unknown high-severity vulnerabilities across open-source software libraries.

500 novel findings in codebases that have had fuzzers running against them for years, some with bugs that went undetected for decades… Wild.

The model was pointed at open-source projects and given standard vulnerability analysis tools, but received no custom scaffolding, no specialized prompting, and no instructions on how to find bugs. It figured out its own methodology, including reading Git commit histories to find similar unpatched flaws and reasoning through compression algorithms to craft proof-of-concept exploits. Out-of-the-box capability, not months of fine-tuning.

“It’s a race between defenders and attackers, and we want to put the tools in the hands of defenders as fast as possible.” - Head of Red Team at Anthropic, Logan Graham

For security teams running open-source dependencies (pretty much everyone), the window between discovery and exploitation just got shorter.

Dig Deeper: Anthropic Frontier Red Team Report | Fortune | Axios

FIRST Forecasts 50,000+ CVEs in 2026

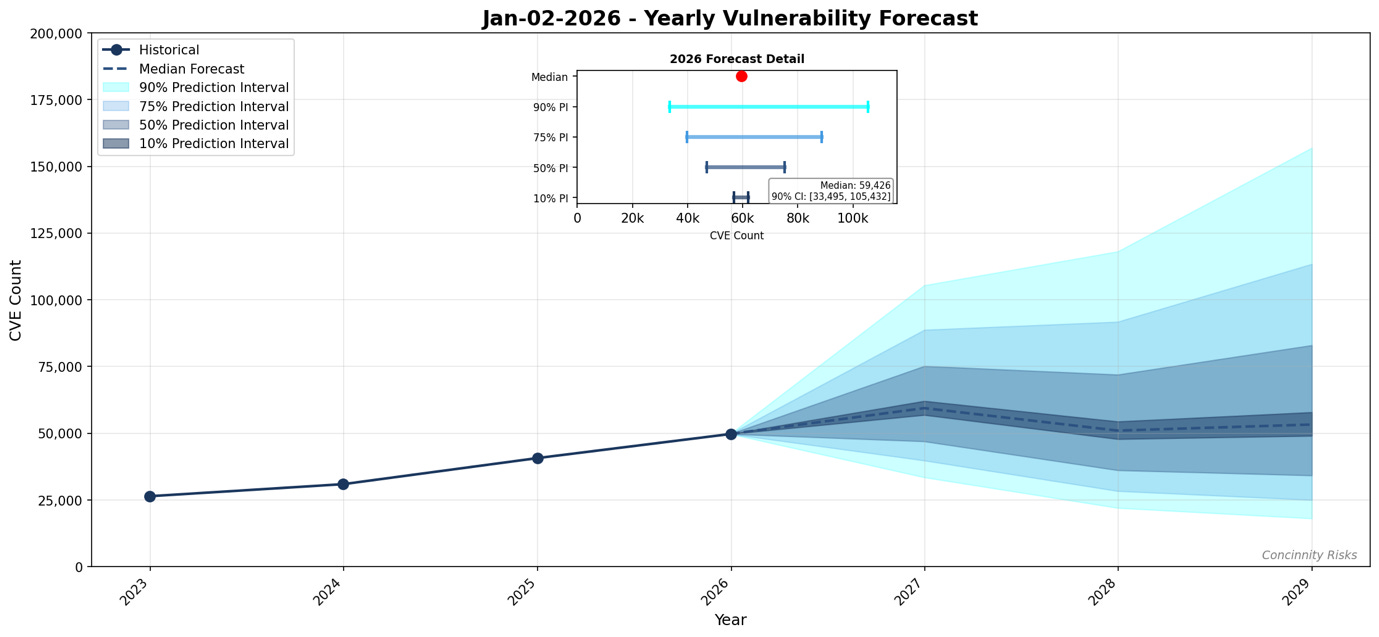

FIRST’s 2026 Vulnerability Forecast projects a median of roughly 59,427 new CVEs this year, with a 90% confidence interval ranging from 30,000 to 117,000. If the median holds, 2026 will be the first year to cross the 50,000 CVE threshold, a milestone FIRST calls “significant in vulnerability disclosure history.” Realistic scenarios push the number as high as 70,000 to 100,000.

FIRST’s methodology, built on historical CVE records and NVD/MITRE publication trends, hit a 7.48% error rate on 2025 yearly predictions and 4.96% for Q4 2025. It also projects volumes staying above 50,000 in the following years: median estimates of 51,018 CVEs in 2027 and 53,289 in 2028, with upper bounds approaching 193,000 by 2028.

My first reaction is “shit, that’s a lot of vulns”. Second reaction is this is extremely validating for vendors doing any sort of vulnerability prioritization. Third reaction is that this ties well into the Anthropic story we just covered above.

RSAC 2026 Innovation Sandbox: 10 Finalists, $5M Each, and a Very Clear Theme

RSAC announced the 10 finalists for its 21st Innovation Sandbox contest, each awarded a $5M SAFE investment ahead of three-minute pitches at Moscone on March 23.

The Class of 2026: Charm Security, Clearly AI, Crash Override, Fig Security, Geordie AI, Glide Identity, Humanix, Realm Labs, Token Security, and ZeroPath.

6 of 10 finalists are building for agentic AI security, AI governance, or human-layer defense.

The standouts for me are Realm Labs (inference-time observability is a genuinely unsolved problem), Geordie AI (agent governance with serious pedigree from Snyk, Veracode, and Darktrace founders, plus the Black Hat Europe 2025 Startup Spotlight win), and Glide Identity (SIM-anchored cryptographic auth is a fundamentally different approach than MFA).

I’m working on a standalone deep dive breaking down each finalist, what they actually do, and who has a real shot. Stay tuned.

Dig Deeper: RSAC Finalists Announcement

Google: State-Backed Hackers Caught Using Gemini Across the Full Kill Chain

Google’s Threat Intelligence Group (GTIG) released its latest AI Threat Tracker, and it validates what many of us have known for a while and what Anthropic covered in November 2025: adversaries are deploying AI-enabled malware in live operations.

APT groups from China, Iran, North Korea, and Russia are using Gemini for reconnaissance, phishing, C2 development, and data exfiltration.

GTIG identified two malware families, PROMPTFLUX and PROMPTSTEAL, that call LLMs during execution.

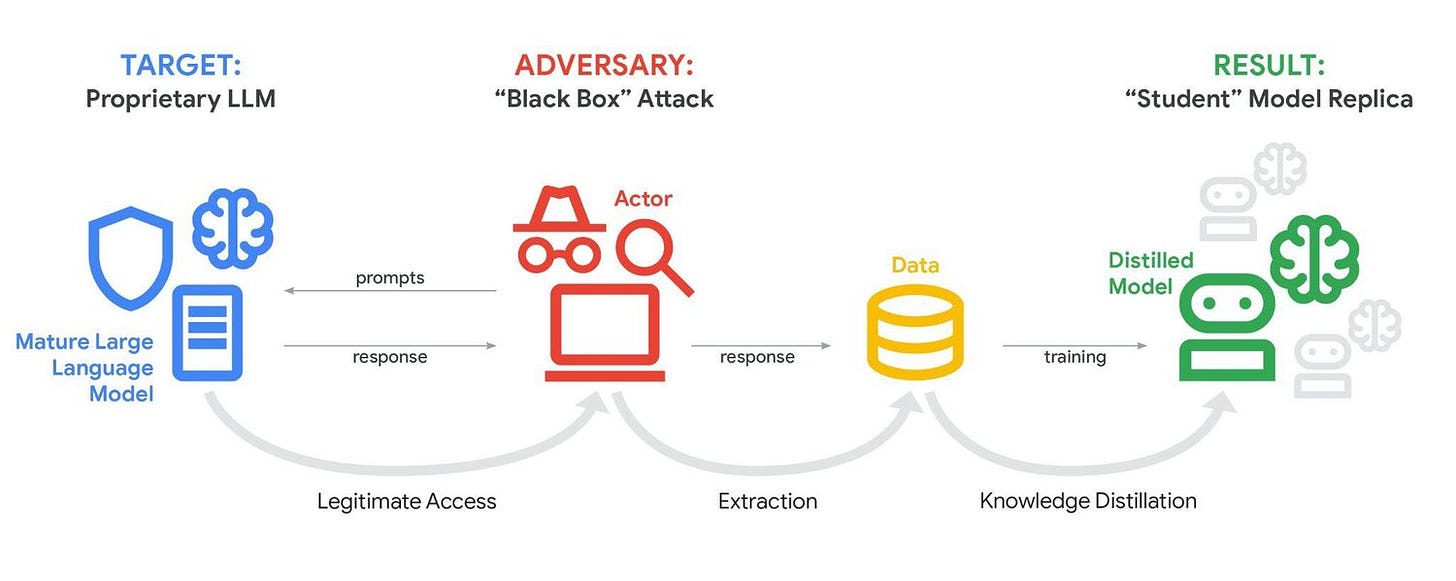

GTIG also flagged a surge in “distillation attacks.” One campaign hit Gemini with 100,000+ prompts attempting to extract reasoning traces.

The Anthropic parallel tracks exactly. In November 2025, Anthropic disrupted a Chinese state-sponsored group using Claude Code as an autonomous penetration orchestrator, with AI executing 80-90% of operations independently. I’d imagine there’s overlap among these threat actors and that they’re working for governments.

None of this is surprising but it’s certainly worth tracking.

Dig Deeper: GTIG AI Threat Tracker | Anthropic: AI-Orchestrated Espionage (Nov 2025) | BleepingComputer

The First Signs of AI Burnout Are Here, and Security Teams Should Be Paying Attention

A new study published in Harvard Business Review tracked what happened when employees at a 200-person tech company genuinely embraced AI tools over eight months. UC Berkeley researchers found nobody worked less.

To-do lists expanded to fill every hour AI freed up, then kept going. Separately, a METR trial found experienced developers using AI tools took 19% longer on tasks while believing they were 20% faster. An NBER study across thousands of workplaces measured actual productivity gains at just 3%.

Sound familiar? Vendors promise AI will finally solve SOC analyst and incident response burnout, alert fatigue, and the eternal “staffing gap.” The tools are getting genuinely better, and the right AI tooling on top of a well-architected SOC can be a real force multiplier. But if the pattern from this research holds, “faster” just means leadership expects more coverage, more investigations, more reporting, not fewer hours. The alert queue may shrink faster. You’ll detect and address threats sooner. But the roles will evolve to cover more ground, and operators should plan for that.

AI won’t save us from bad architecture, understaffed teams, or alert pipelines that were broken before the LLM showed up.

Cybersecurity Earnings Season Opens Strong: Cloudflare, Fortinet, Qualys All Beat

Early returns from Q4 earnings season are painting a healthy picture for cybersecurity and infrastructure spending.

Cloudflare stole the spotlight: $614.5M in Q4 revenue (up 34% YoY), crushing the Street’s $591.3M estimate. CEO Matthew Prince disclosed the company’s largest contract ever at $42.5M/year (🤯).

Fortinet posted $1.91B in Q4 revenue (up 15% YoY) with billings surging 18% to $2.37B and product revenue up 20%, suggesting the firewall refresh cycle is alive and well even though Fortinet has had a slew of critical vulnerabilities over the past year-plus. Seems like the product is pretty sticky if the vulns aren’t causing churn? Either way, they’re doing something very right.

Rapid7 posted $217.4M in Q4 revenue (up just 0.5% YoY). Full-year 2025 revenue hit $860M (up 2% YoY) with ARR flat at $840M. Sounds like they’ll be making a strong push for services/MDR in coming quarters.

Qualys delivered $175.3M in Q4 revenue (up 10% YoY).

Dig Deeper: Cloudflare Q4 Results | Fortinet, NetScout, Qualys Q4 Results | Rapid7

Vega Security Raises $120M Series B to Kill Legacy SIEM Model

Friends of the newsletter Vega Security closed a $120M Series B led by Accel with Cyberstarts, Redpoint, and CRV, nearly doubling their valuation to $700M and bringing total funding to $185M.

Vega is building a SecOps suite that adds federated detection and response capabilities across cloud services, data lakes, and existing storage, giving teams flexibility in how they query and act on security data at scale.

The approach seems to be resonating: Vega has recently signed multi-million-dollar contracts with banks, healthcare companies, and Fortune 500 firms including Instacart. Also worth noting that Vega has scaled to 100 employees in the ~2 years since inception.

TCP readers got the early look as we covered Vega six months before they came out of stealth.

🔮 The Future of Security 🔮

AI Security

Microsoft dropped two significant AI security research posts in one week. The first introduces a practical scanner for detecting backdoored open-weight language models at scale. The research identifies three “signatures” in poisoned models. One example: trigger tokens hijack the model’s attention mechanism, creating a distinctive pattern that’s measurably different from clean models. It builds on Anthropic’s earlier “sleeper agents” work showing that safety post-training fails to remove embedded backdoors.

The second paper is arguably more alarming: a single unlabeled prompt was enough to reliably strip safety alignment from 15 open-weight models across DeepSeek, GPT-OSS, Gemma, Llama, Ministral, and Qwen families, with minimal loss of utility. The technique reverses the same methods used to improve model safety.

Two takeaways: open-weight model supply chains need integrity scanning before deployment, and current alignment techniques remain brittle. If you’re running open-weight models in production, both papers are required reading, imo.

Application Security

Ex-GitHub CEO Raises $60M Seed to Tackle AI Code Provenance

Former GitHub CEO Thomas Dohmke launched Entire with a $60M seed round at a $300M valuation, led by Felicis with Madrona, M12 (Microsoft), Basis Set, Jerry Yang, and Datadog CEO Olivier Pomel.

The company is building AI-native developer infrastructure to solve a growing problem: as AI agents write more production code, engineering teams can’t review it fast enough or trace why decisions were made.

What it does:

Checkpoints, an open-source CLI that captures full context behind AI-generated code: prompts, transcripts, agent decision steps, implementation logic

Git-compatible database unifying AI-produced code with a semantic reasoning layer for multi-agent collaboration

Supports Claude Code and Gemini CLI today

The security angle here is supply chain integrity for AI-generated code. If your agents are shipping code faster than humans can audit it, provenance tracking is crucial. Worth watching as AI coding tools move from “assist” to “author.”

Browser Security

Zscaler Acquires Browser Security Firm SquareX

Zscaler acquired SquareX, an 80-person browser detection and response (BDR) startup that raised $26M including a $20M Series A led by SYN Ventures. SquareX’s lightweight browser extension delivers zero trust enforcement, DLP, and device posture checks in Chrome, Edge, Firefox, and Safari without requiring a standalone enterprise browser or full agent.

Governance, Risk, and Compliance

Complyance Raises $20M Series A for AI-Native GRC

Complyance closed a $20M Series A led by GV, with Speedinvest, Everywhere Ventures, and angels from Anthropic and Mastercard participating. Total raised sits at $28M. The platform deploys AI agents to automate governance, risk, and compliance workflows, running continuous checks against company-specific criteria and risk thresholds instead of the traditional quarterly audit cycle.

Identity & Access Management

Keycard Labs Acquires Anchor.dev to Build Identity Layer for AI Coding Agents

Keycard Labs acquired certificate management startup Anchor.dev, bringing on a team with infrastructure experience from Cloudflare, GitHub, and Heroku. Keycard provides short-lived, task-based credentials for coding agents (Cursor, Claude Code, Codex, Windsurf) accessing production systems, with a single identity and audit layer across MCP, CLI, and agent-created tooling. As agentic dev tools get deeper production access, expect identity and access governance to become the bottleneck everyone scrambles to solve.
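
For readers who want a feel for what “short-lived, task-based credentials” means in practice, here is a minimal Python sketch. To be clear, this is not Keycard’s API or implementation. The function names (`issue_credential`, `authorize`), the claim fields, and the HMAC signing scheme are all assumptions made up for illustration, using only the standard library.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional, Sequence

# Hypothetical demo key; a real system would keep signing keys in a KMS or HSM.
SIGNING_KEY = b"demo-only-secret"


def issue_credential(agent: str, task: str, scopes: Sequence[str],
                     ttl_seconds: int = 300, now: Optional[float] = None) -> str:
    """Mint a signed token bound to one agent, one task, and a short lifetime."""
    issued_at = time.time() if now is None else now
    claims = {
        "agent": agent,
        "task": task,
        "scopes": sorted(scopes),
        "exp": issued_at + ttl_seconds,  # expiry as a Unix timestamp
    }
    payload = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return f"{payload.decode()}.{signature}"


def authorize(token: str, required_scope: str, now: Optional[float] = None) -> bool:
    """Accept only if the signature verifies, the token is unexpired, and the scope was granted."""
    try:
        payload, signature = token.rsplit(".", 1)
    except ValueError:
        return False
    expected = hmac.new(SIGNING_KEY, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature, expected):
        return False
    claims = json.loads(base64.urlsafe_b64decode(payload))
    current = time.time() if now is None else now
    return current < claims["exp"] and required_scope in claims["scopes"]
```

Calling `issue_credential("claude-code", "deploy-1234", ["db:read"])` would hand the agent a token that `authorize` accepts for `db:read` for five minutes and rejects for anything else. The point of the design is that a leaked credential is only useful for one narrow task and only briefly, and every token names the agent and task for the audit trail.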

Product Security

Nullify Raises $12.5M Seed for AI-Powered Product Security Workforce

Nullify closed a $12.5M seed led by SYN Ventures with Black Nova Venture Capital, bringing total raised to $16.9M. The company pitches autonomous “AI employees” that handle vuln detection, triage, exploit validation, and remediation end-to-end, shipping merge-ready fixes directly to developers.

SaaS Security

Reco Raises $30M Series B for AI SaaS Security

Reco closed a $30M Series B led by Zeev Ventures, bringing total funding to $85M. The round comes less than 10 months after its last raise, fueled by 500% YoY growth in 2024 and an additional 400% in 2025. The platform provides continuous discovery and governance across AI SaaS apps, autonomous agents, shadow AI, and MCP connections, covering 215+ integrations. Customers include Fortune 500s across finance, healthcare, pharma, and tech.

SaaS security was already a top concern but enterprise AI adoption has poured gas on that and Reco is capitalizing on it.

Security Operations

How I Use LLMs for Security Work - THOR Collective Dispatch

Josh Rickard walks through six practical prompting techniques for security practitioners using Claude, Cursor, and ChatGPT, including role-stacking (layering multiple perspectives per prompt), being explicit about your tech stack, requesting validation to reduce hallucinations, and framing questions as systems problems rather than point solutions.

Great primer for analysts and threat hunters experimenting with their LLM workflows.

AiRRived Emerges from Stealth with $6.1M for Agentic OS

AiRRived launched from stealth with a $6.1M seed led by Cannage Capital, with Plug and Play Ventures, Rebellion Ventures, and Inner Loop Capital participating. AiRRived builds an “Agentic OS” that unifies SOC, GRC, IAM, vuln management, IT, and business operations into a single agentic layer.

I think their approach is cool. It goes toe-to-toe with the Palo Altos and CrowdStrikes of the world, whose product moat has dwindled a bit given advances in AI development. However, the large players are mostly safe for now since their biggest moats are talent density and distribution channels.

Vulnerability Management

Nucleus Security Raises $20M to Scale Exposure Management Platform

Nucleus Security closed a $20M Series C led by Delta-v Capital with Arthur Ventures, bringing total funding to roughly $86M. The platform acts as a centralized system of record for vulnerability and exposure management.

Interested in sponsoring TCP?

Sponsoring TCP not only helps me continue to bring you the latest in security innovation, but it also connects you to a dedicated audience of 20,000+ CISOs, practitioners, founders, and investors across 125+ countries 🌎

Bye for now 👋🏽

That’s all for this week… ¡Nos vemos la próxima semana!

Disclaimer

The insights, opinions, and analyses shared in The Cybersecurity Pulse are my own and do not represent the views or positions of my employer or any affiliated organizations. This newsletter is for informational purposes only and should not be construed as financial, legal, security, or investment advice.