AI in Security: Lessons from 2025 and What’s Next

AI threats, defensive use cases, and predictions for CISOs and security practitioners in 2026

Welcome to The Cybersecurity Pulse (TCP)! I’m Darwin Salazar, Head of Growth at Monad and former detection engineer in big tech. Each week, I bring you the latest security innovation and industry news. Subscribe to receive weekly updates! 📧

Over Christmas break, I came across Andrej Karpathy's 2025 LLM Year in Review blog post, which put into perspective how far AI came in 2025. It got me reflecting on the shifts (for better or worse) that have taken place in the security industry.

TL;DR: AI in security crossed the ROI threshold, with real-world outcomes on both the attack and defense sides. Empirical data and real-world incidents point to 2025 as the breakthrough year.

In this post, I’ll break down what that looks like on offense, defense, and where it’s headed in 2026.

AI in Offense: Better Attack Economics

The highlight isn’t that AI discovered novel 0-days or invented new TTPs. It’s that LLMs have lowered the cost and time barriers significantly.

Stanford, CMU, and Gray Swan AI published the ARTEMIS study, the first controlled test of AI agents versus human pentesters on a live enterprise network (8,000+ hosts, 12 subnets, real IDS). ARTEMIS' AI agents placed second overall, outperforming 9 of 10 OSCP-certified professionals. Cost: $18.21/hour (~$38K/yr) vs. $150K+ fully loaded for a senior pentester (the study cited a $125K base salary, but in my experience the real number is much higher).
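The ~$38K/yr figure is consistent with running the agent for a standard full-time year. A quick sanity check, assuming 40 hours/week for 52 weeks (2,080 hours); the study's own annualization method may differ:

```python
# Annualize the agent's hourly cost.
# Assumption: a full-time year of 40 h/week x 52 weeks = 2,080 hours.
HOURLY_AGENT_COST = 18.21      # USD/hour, from the ARTEMIS study
HOURS_PER_YEAR = 40 * 52       # assumption, not from the study

def annualized_cost(hourly: float, hours: int = HOURS_PER_YEAR) -> float:
    """Return the yearly cost of something billed at `hourly` USD/hour."""
    return hourly * hours

agent_yearly = annualized_cost(HOURLY_AGENT_COST)
human_yearly = 150_000         # the author's fully loaded estimate above
print(f"Agent: ${agent_yearly:,.0f}/yr")                        # Agent: $37,877/yr
print(f"Human / agent ratio: {human_yearly / agent_yearly:.1f}x")  # 4.0x
```

Note that an agent isn't bound to a 2,080-hour year. Running it around the clock would raise its yearly cost but also its coverage.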

The agents did have higher false-positive rates and struggled with GUI-based tasks, but excelled at enumeration and parallel exploitation. When ARTEMIS flagged a vulnerable target, it immediately spawned a sub-agent to probe it in the background while continuing to scan. Humans simply can’t match that parallelism. In the study, one human discovered an LDAP server vuln but then never returned to it.
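The parallelism described above maps naturally onto async task spawning. Below is a minimal sketch, assuming hypothetical `scan`/`probe` stand-ins (not ARTEMIS code): the supervisor keeps enumerating, spawns a background task for each lead, and awaits all of them at the end so no lead gets dropped.

```python
import asyncio
from typing import Optional

# Hypothetical stand-ins: real scanning/probing would call actual tools.
async def probe(host: str, finding: str, results: list[str]) -> None:
    """Sub-agent: investigate one lead in the background."""
    await asyncio.sleep(0.01)  # placeholder for slow, deep investigation
    results.append(f"{host}: probed {finding}")

async def scan(hosts: dict[str, Optional[str]]) -> list[str]:
    """Supervisor loop: keep enumerating while sub-agents run concurrently."""
    results: list[str] = []
    subagents: list[asyncio.Task] = []
    for host, finding in hosts.items():
        await asyncio.sleep(0)  # placeholder for a quick enumeration step
        if finding is not None:
            # Spawn a background task instead of pausing the scan.
            subagents.append(asyncio.create_task(probe(host, finding, results)))
    # Await every spawned lead, so none is forgotten (unlike the LDAP example).
    await asyncio.gather(*subagents)
    return results

if __name__ == "__main__":
    # Hypothetical targets; None means enumeration found nothing interesting.
    targets = {"10.0.0.5": "LDAP misconfiguration", "10.0.0.6": None, "10.0.0.7": "outdated SMB"}
    print(asyncio.run(scan(targets)))
```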

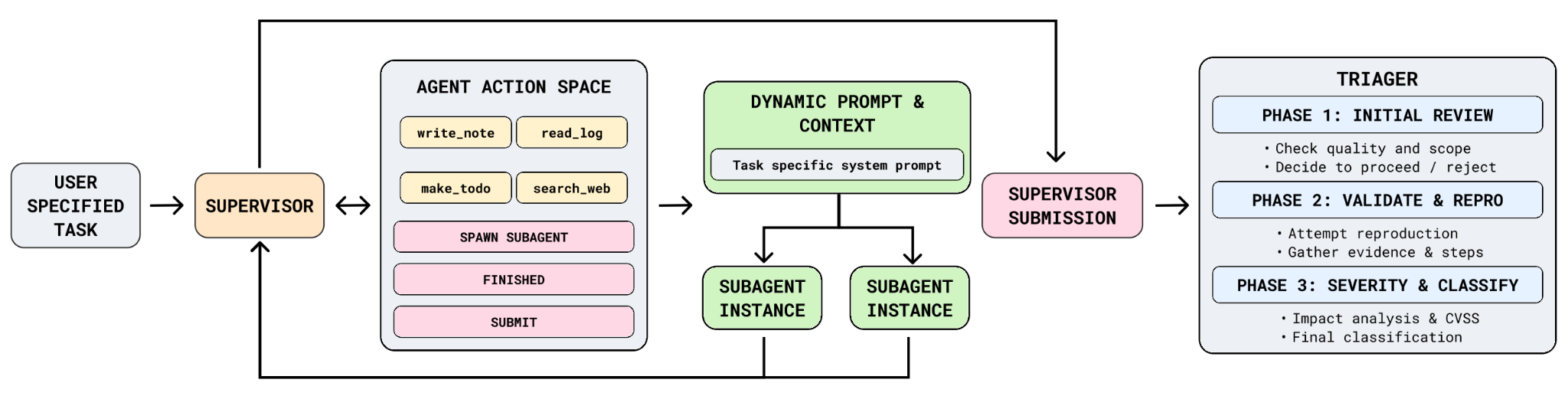

ARTEMIS is a complex multi-agent framework consisting of a high-level supervisor, unlimited sub-agents with dynamically created expert system prompts, and a triage module. It is designed to complete long-horizon, complex penetration tests on real-world production systems.
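A rough sketch of two of those pieces: a dynamically generated expert prompt and a triage step that filters out duplicates and unverified claims (relevant given the agents' higher false-positive rates). The `Finding` fields, prompt template, and verification rule are all hypothetical, not taken from ARTEMIS.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Finding:
    host: str
    title: str
    evidence: str  # captured proof; empty if the agent only claimed it

def expert_prompt(finding: Finding) -> str:
    """Build a specialist system prompt for a sub-agent (hypothetical template)."""
    return (f"You are an expert in {finding.title}. "
            f"Validate the issue on {finding.host} using only observed evidence.")

def triage(findings: list[Finding], verify: Callable[[Finding], bool]) -> list[Finding]:
    """Drop duplicates and anything that fails an independent verification check."""
    seen: set[tuple[str, str]] = set()
    confirmed: list[Finding] = []
    for f in findings:
        key = (f.host, f.title)
        if key in seen:
            continue  # duplicate report of the same issue
        seen.add(key)
        if verify(f):
            confirmed.append(f)
    return confirmed

raw = [
    Finding("10.0.0.5", "LDAP misconfiguration", "anonymous bind returned 42 entries"),
    Finding("10.0.0.5", "LDAP misconfiguration", "anonymous bind returned 42 entries"),
    Finding("10.0.0.9", "credential reuse", ""),  # claimed, but no proof captured
]
# Hypothetical verifier: only accept findings that carry captured evidence.
report = triage(raw, verify=lambda f: bool(f.evidence))
print([f.host for f in report])  # ['10.0.0.5']
print(expert_prompt(report[0]))
```

In practice, `verify` would re-run a check (e.g., actually testing a credential) rather than trusting the agent's own evidence field.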

With the proper guardrails, high-quality continuous pentesting is now economically viable for many orgs. That has significant implications for defenders, and AI will only get better.

In my opinion, the ARTEMIS study should be required reading for anyone in security, regardless of role. It hints at where the attack side is headed.

Other offensive AI highlights include an AI-pentesting startup, XBOW, reaching #1 on HackerOne’s US bug bounty leaderboard. DARPA’s AIxCC challenge produced AI tools that found 54 of 63 synthetic vulnerabilities across 54 million lines of code. Many of the tools created and used for that challenge are now open source.


The Hallucination Problem Persists

In November, Anthropic released a report on how it disrupted a Chinese state-sponsored group running an AI-orchestrated cyber espionage campaign. The story probably generated a lot of FUD, but the impact was real: actual organizations were compromised before Anthropic intervened. The attackers jailbroke and socially engineered Claude into executing 80-90% of their operations, including recon, exploitation, lateral movement, and credential harvesting.

While there are many cool aspects to this story, I think what's most relevant to defenders is that Claude frequently hallucinated during operations. It claimed to have obtained credentials that didn't work. It flagged "critical discoveries" that turned out to be publicly available information. Humans still had to verify everything.

And this was with Claude Code, arguably the most sophisticated AI tool of its kind available to the public. Most attackers are using open-source or shady models that lack the security guardrails of Gemini, ChatGPT, or Claude and are often less capable. If state-sponsored attackers are getting tripped up by hallucinations on Claude, imagine the failure rate on weaker, ungoverned models.

This is the real state of AI offense heading into 2026: dangerous enough to cause harm, unreliable enough to require human oversight. Both things are true. And as I said in the previous section, AI will only get better.

Real-World Offensive Use Cases

Already in the wild:

Google observed PROMPTFLUX, malware that queries LLMs mid-attack and adapts dynamically

CrowdStrike reported AI-generated phishing hitting 54% click-through rates versus 12% for human-crafted phishing

Wiz documented malware (LameHug, s1ngularity) invoking LLMs mid-execution. Still immature, but being refined in the wild.

Anthropic disclosed that a criminal with no prior encryption knowledge used Claude to build functional ransomware, which was then sold for $400 to $1,200
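Malware that calls LLMs mid-execution has to reach an LLM API somewhere, which gives defenders a hunting angle. Below is a minimal sketch over a hypothetical egress-log schema (`process`, `dest_host`). The hostnames are real public API endpoints but not an exhaustive list, and the approved-process allowlist is an assumption you would tailor to your own environment.

```python
# Illustrative, non-exhaustive set of public LLM API hostnames.
LLM_API_HOSTS = {
    "api.openai.com",
    "api.anthropic.com",
    "generativelanguage.googleapis.com",
    "api-inference.huggingface.co",
}
# Assumption: processes your org has approved to talk to LLM APIs.
APPROVED_PROCESSES = {"chrome.exe", "code.exe"}

def suspicious_llm_calls(events: list[dict[str, str]]) -> list[dict[str, str]]:
    """Return events where an unapproved process reached a known LLM API host."""
    return [
        e for e in events
        if e["dest_host"] in LLM_API_HOSTS and e["process"] not in APPROVED_PROCESSES
    ]

# Hypothetical egress events.
events = [
    {"process": "chrome.exe", "dest_host": "api.openai.com"},
    {"process": "invoice_viewer.exe", "dest_host": "api-inference.huggingface.co"},
    {"process": "powershell.exe", "dest_host": "example.com"},
]
for hit in suspicious_llm_calls(events):
    print(f"ALERT: {hit['process']} -> {hit['dest_host']}")
```

Only the `invoice_viewer.exe` event fires here. A production rule would also need subdomain matching, a maintained host list, and coverage for locally run models that never leave the host.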

Takeaway: Assume your adversaries have AI augmentation. Reducing MTTD and MTTR becomes even more important. AI-powered pentesting is a huge defensive unlock. Budget and staff accordingly.
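If you're going to drive MTTD and MTTR down, it helps to compute them the same way every time. Below is a minimal sketch over hypothetical incident records. It uses one common definition (MTTD = occurrence to detection, MTTR = detection to resolution). Some teams measure MTTR from occurrence instead, so pick one definition and stick with it.

```python
from datetime import datetime, timedelta
from statistics import mean

# Hypothetical incident records: (occurred, detected, resolved)
incidents = [
    (datetime(2025, 3, 1, 8, 0), datetime(2025, 3, 1, 20, 0), datetime(2025, 3, 2, 8, 0)),
    (datetime(2025, 6, 10, 9, 0), datetime(2025, 6, 11, 9, 0), datetime(2025, 6, 11, 21, 0)),
]

def hours(delta: timedelta) -> float:
    """Convert a timedelta to fractional hours."""
    return delta.total_seconds() / 3600

# MTTD: average time from occurrence to detection.
mttd = mean(hours(detected - occurred) for occurred, detected, _ in incidents)
# MTTR: average time from detection to resolution (one common definition).
mttr = mean(hours(resolved - detected) for _, detected, resolved in incidents)
print(f"MTTD: {mttd:.1f} h, MTTR: {mttr:.1f} h")  # MTTD: 18.0 h, MTTR: 12.0 h
```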

AI in Defense: Real-World ROI Across Many Use Cases

For years, "AI-powered security" was aspirational marketing. 2025 was the breakthrough year: we saw true ROI and more serious use cases beyond natural language queries and copilots.

One prime example (and there are many) is Grammarly's use of MCP. Using Wiz MCP and Claude, their security team cut alert triage from 30-45 minutes down to 4 minutes per alert, roughly a 90% reduction.

MCP is the connective tissue that makes this possible. It lets AI agents query your SIEM, pull context from your cloud console, and take action in your ticketing system without custom integrations. Wiz, CrowdStrike, Splunk, Palo Alto, and countless others have all shipped MCP support.
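Under the hood, MCP messages are JSON-RPC 2.0. An agent invokes a server-side tool with a `tools/call` request carrying the tool `name` and its `arguments`, and gets back a `result` holding a `content` list. Below is a toy, in-process sketch of that round trip. The `search_siem` tool is hypothetical, and a real client/server would also perform the initialization handshake and `tools/list` discovery, both omitted here. This is not the official MCP SDK.

```python
import json

def tools_call_request(req_id: int, tool: str, arguments: dict) -> str:
    """Serialize an MCP-style JSON-RPC 2.0 'tools/call' request."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": req_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    })

# Hypothetical tool a SIEM vendor's MCP server might expose (stubbed result).
def search_siem(query: str, limit: int = 10) -> str:
    return f"{min(limit, 3)} events matched {query!r}"

TOOLS = {"search_siem": search_siem}

def handle(raw: str) -> dict:
    """Toy server-side dispatch: route a tools/call to the registered function."""
    msg = json.loads(raw)
    params = msg["params"]
    text = TOOLS[params["name"]](**params["arguments"])
    return {
        "jsonrpc": "2.0",
        "id": msg["id"],
        "result": {"content": [{"type": "text", "text": text}], "isError": False},
    }

req = tools_call_request(1, "search_siem", {"query": "failed logins user=alice", "limit": 5})
print(handle(req)["result"]["content"][0]["text"])
# 3 events matched 'failed logins user=alice'
```

Because every vendor speaks this same message shape, the agent needs no custom integration per tool. That uniformity is also why a single over-permissioned MCP server can carry a lot of blast radius.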

The AI SOC also gained more traction in 2025. Per Gartner's 2025 Cybersecurity Innovations survey, 42% of enterprises are piloting AI agents for security operations, and another 46% plan to start next year. This podcast episode is a great listen if you're still skeptical about the AI SOC.

Foundation model companies also shipped dedicated security capabilities in 2025. Anthropic launched /security-review in Claude Code, a command that scans codebases for SQL injection, XSS, auth flaws, and insecure data handling, plus a GitHub Action for automated PR reviews. OpenAI followed with GPT-5.2-Codex, which includes similar vuln detection and a Trusted Access Pilot. "Security" is mentioned 27 times in the GPT-5.2-Codex announcement post. What does that tell us?
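To make the SQL injection category concrete, here is the classic flaw a review like this is meant to catch, alongside the standard fix (parameterized queries). This is a generic illustration using Python's built-in `sqlite3`, not output from /security-review or any Codex feature.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", "admin"), ("bob", "user")])

def find_user_unsafe(name: str) -> list[tuple]:
    # Vulnerable: user input is concatenated directly into the SQL string.
    return conn.execute(f"SELECT name, role FROM users WHERE name = '{name}'").fetchall()

def find_user_safe(name: str) -> list[tuple]:
    # Fixed: the driver binds `name` as data, so it can never alter the query.
    return conn.execute("SELECT name, role FROM users WHERE name = ?", (name,)).fetchall()

payload = "nobody' OR '1'='1"
print(find_user_unsafe(payload))  # leaks every row in the table
print(find_user_safe(payload))    # []
```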

In any case, I expect the labs to keep shipping security capabilities, starting with AppSec: they're already generating the code, so scanning it is a natural extension. I think they'll come for other domains in due time. How would they fare in a bake-off against dedicated security vendors? That's a topic for another day.

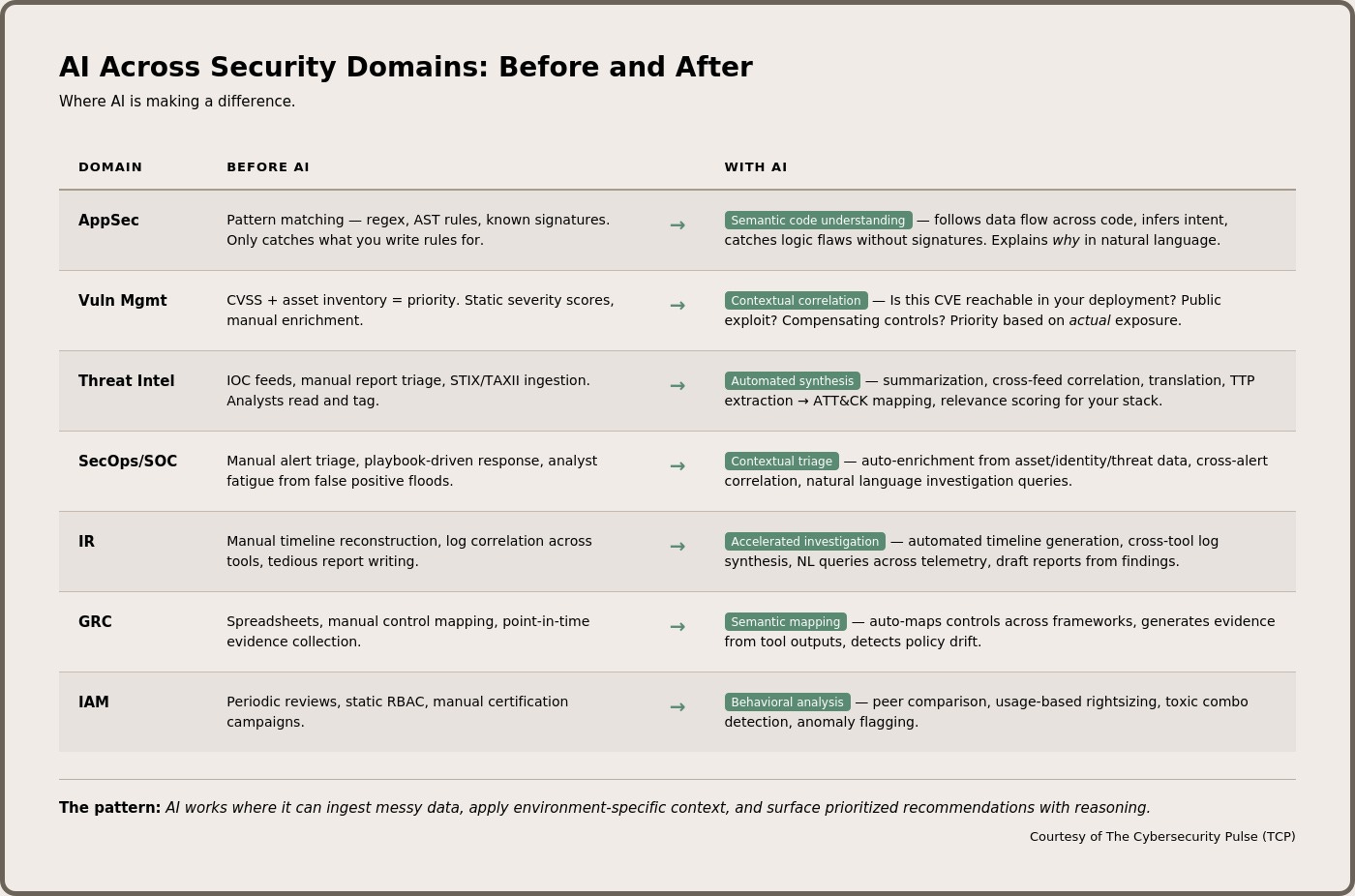

AI Across Security Domains

The Numbers

These come from IBM's 2025 Cost of a Data Breach Report (conducted by the Ponemon Institute). Cost-based findings are estimated from interviews rather than audited financials, so take them with a grain of salt. That said, the report covers 600 orgs and 3,400+ interviews, and it has been running for 20 years. It's one of the most trusted and most cited reports in our industry.

Highlights:

$1.9M saved per breach for orgs using AI and automation extensively

Breach costs dropped 9% ($4.88M to $4.44M), the first decline in five years

Mean time to identify dropped to 181 days, a nine-year low

Organizations using AI extensively shortened their breach lifecycle by 80 days
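A quick check that the headline percentages hold up, using a simple percent-change helper. The same helper also confirms that Grammarly's 30-45 minute to 4 minute triage improvement lands at roughly 87-91%.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new (negative means a decrease)."""
    return (new - old) / old * 100

# IBM 2025 global average breach cost, USD millions.
print(f"{pct_change(4.88, 4.44):.1f}%")  # -9.0%

# Grammarly triage time per alert, minutes.
for before in (30, 45):
    print(f"{before} -> 4 min: {pct_change(before, 4):.1f}%")
# 30 -> 4 min: -86.7%
# 45 -> 4 min: -91.1%
```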

These stats hint that teams adopting AI for security are outpacing their peers when it comes to breach costs and mean time to detect, respond, contain, and remediate.

New Attack Surface: Shadow AI and Agentic Access

Two challenges that AI introduced, and that became more prevalent in 2025, are agentic IAM and Shadow AI. I cover the latter in depth here and in this collab report with Reco on the State of Shadow AI. I also wrote a report on the Future of Data Security, which covers the data impacts of both challenges.

Saying that enterprise adoption of LLMs and agentic capabilities has drastically changed the attack surface and the enterprise threat model would be an understatement.

I’ll be covering agentic IAM challenges in a follow-up post.

2026 Predictions

Sure, security teams will adopt more AI and build some capabilities in-house. Sure, a major supply chain breach tied to MCP may very well occur. But what about the less obvious stuff? A few things I'm watching:

Burnout decreases for security operators and increases (slightly) for leaders. AI handling alert triage and aiding in investigations and vuln management will take some of the grind off security operators. Driving enterprise AI adoption while ensuring the security team is an enabler, not a blocker, will put more pressure on security leaders. Paired with existing demands across compliance, legal, and board-level risk discussions, that will continue to make the CISO role a tough one.

Foundation model labs become security vendors. Anthropic and OpenAI shipped AppSec capabilities in 2025. Threat intel and GRC feel like natural extensions for LLMs. A foundation model company acquiring a security startup doesn't sound too crazy to me. We've seen stranger things happen.

MCP governance becomes a product category. The protocol is being adopted faster than it's being secured; almost every SaaS company has MCP capabilities. The Asana MCP vuln was a wake-up call. The Salesloft breach (different vector, same lesson) has boards asking questions about AI agent permissions and having nightmares about the blast radius. Startups (e.g., RunLayer, Aira Security) are already building dedicated tooling for auditing MCP server connections, monitoring tool calls, and enforcing least privilege on agent actions. I expect MCP governance to blow up in 2026.
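What "enforcing least privilege on agent actions" can look like at its simplest: a default-deny gate that checks every tool call against a per-agent allowlist, routes sensitive tools to a human, and writes an audit record either way. This is a hypothetical sketch (the agent names, tool names, and policy shape are made up), not how RunLayer, Aira Security, or any other product works.

```python
import json
import time
from dataclasses import dataclass, field

@dataclass
class ToolPolicy:
    """Hypothetical per-agent policy: allowed tools, and which need human approval."""
    allowed: set[str]
    needs_approval: set[str] = field(default_factory=set)

@dataclass
class Gate:
    policies: dict[str, ToolPolicy]
    audit_log: list[str] = field(default_factory=list)

    def check(self, agent: str, tool: str, arguments: dict) -> str:
        """Return 'allow', 'approve', or 'deny', and record the decision."""
        policy = self.policies.get(agent)
        if policy is None or tool not in policy.allowed:
            decision = "deny"      # default-deny unknown agents and tools
        elif tool in policy.needs_approval:
            decision = "approve"   # hold for a human before executing
        else:
            decision = "allow"
        self.audit_log.append(json.dumps(
            {"ts": time.time(), "agent": agent, "tool": tool,
             "args": arguments, "decision": decision}))
        return decision

gate = Gate({"triage-bot": ToolPolicy(allowed={"search_siem", "create_ticket"},
                                      needs_approval={"create_ticket"})})
print(gate.check("triage-bot", "search_siem", {"query": "alice"}))  # allow
print(gate.check("triage-bot", "create_ticket", {"title": "x"}))    # approve
print(gate.check("triage-bot", "delete_user", {"user": "alice"}))   # deny
print(len(gate.audit_log))                                           # 3
```

Default-deny is the key design choice. An agent that gains a new tool through a freshly connected MCP server gets nothing until someone explicitly grants it.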

Regulation catches up (partially). Expect AI security disclosure requirements and liability frameworks. EU AI Act enforcement begins. The US will follow with sector-specific rules, with downstream impact on cyber insurance and compliance frameworks.

Hiring favors AI-fluent candidates. Entry-level roles get more competitive as AI absorbs junior-level tasks. The candidates with hands-on AI experience and real-world outcomes will stand out. The rest will struggle to differentiate.

The teams that will handle 2026 best aren’t the ones with the biggest budgets or most headcount. They’re the ones experimenting with all types of AI/ML (vendor and homegrown) and building the data foundations to make it all possible.

When an attack kill chain includes an AI agent, when company leadership sends a memo mandating AI adoption, or when a vendor tries to sell you snake oil, you want to already have opinions based on first-hand experience.

2026 will be another wild year for AI applications in security, and there's more to it than meets the eye.

Interested in sponsoring TCP?

Sponsoring TCP not only helps me continue to bring you the latest in security innovation, but it also connects you to a dedicated audience of 20,000+ CISOs, practitioners, founders, and investors across 125+ countries 🌎

Disclaimer

The insights, opinions, and analyses shared in The Cybersecurity Pulse are my own and do not represent the views or positions of my employer or any affiliated organizations. This newsletter is for informational purposes only and should not be construed as financial, legal, security, or investment advice.
